Datasets:

dataset_info:

features:

- name: image

dtype: image

- name: filename

dtype: string

- name: is_negative

dtype: bool

- name: corner_tl_x

dtype: float32

- name: corner_tl_y

dtype: float32

- name: corner_tr_x

dtype: float32

- name: corner_tr_y

dtype: float32

- name: corner_br_x

dtype: float32

- name: corner_br_y

dtype: float32

- name: corner_bl_x

dtype: float32

- name: corner_bl_y

dtype: float32

splits:

- name: train

num_examples: 32968

- name: validation

num_examples: 8645

- name: test

num_examples: 6652

configs:

- config_name: default

data_files:

- split: train

path: train/*.parquet

- split: validation

path: val/*.parquet

- split: test

path: test/*.parquet

license: other

task_categories:

- image-segmentation

- keypoint-detection

- object-detection

tags:

- document-detection

- corner-detection

- perspective-correction

- document-scanner

- keypoint-regression

language:

- en

size_categories:

- 10K<n<100K

DocCornerDataset

A high-quality document corner detection dataset for training models to detect the four corners of documents in images. This dataset is optimized for building robust document scanning and perspective correction applications.

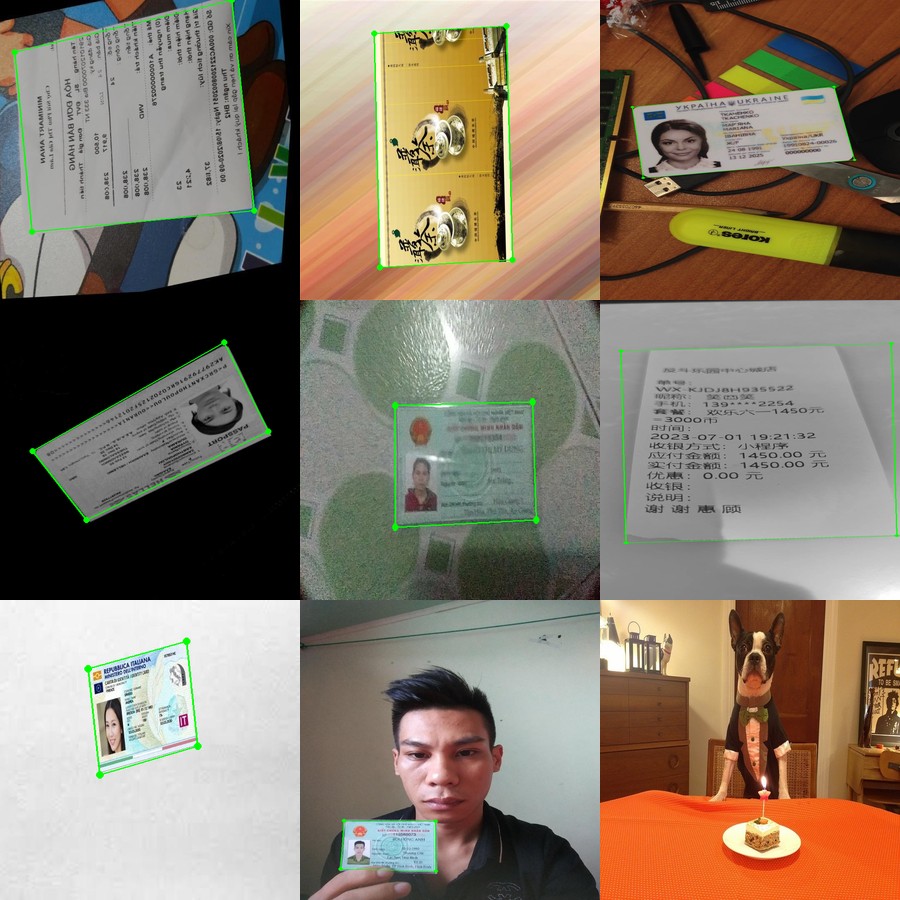

Dataset Examples

Training Set

Validation Set

Test Set

Green polygons show the annotated document corners

Dataset Description

This dataset contains images with document corner annotations, optimized for training robust document detection models. It uses the best-performing splits from an iterative dataset cleaning process with multiple quality validation steps.

Key Features

- High Quality Annotations: Labels refined through iterative cleaning with multiple teacher models

- Diverse Document Types: IDs, invoices, receipts, books, cards, and general documents

- Negative Samples: Includes images without documents for training robust classifiers

- No Overlap: Train, validation, and test splits are completely disjoint

Dataset Statistics

| Split | Images | Description |

|---|---|---|

train |

32,968 | Training set (cleaned iter3 + hard negatives) |

validation |

8,645 | Validation set (cleaned iter3) |

test |

6,652 | Held-out test set (no overlap with train/val) |

| Total | 48,265 |

Data Sources and Licenses

This dataset is compiled from multiple open-source datasets. Please refer to the original dataset licenses before using this data.

MIDV Dataset (ID Cards)

Mobile Identity Document Video dataset for identity document detection and recognition.

| Dataset | Images | License | Source |

|---|---|---|---|

| MIDV-500 | ~9,400 | Research use | Website |

| MIDV-2019 | ~1,350 | Research use | Website |

Citation:

@article{arlazarov2019midv500,

title={MIDV-500: A Dataset for Identity Documents Analysis and Recognition on Mobile Devices in Video Stream},

author={Arlazarov, V.V. and Bulatov, K. and Chernov, T. and Arlazarov, V.L.},

journal={Computer Optics},

volume={43},

number={5},

pages={818--824},

year={2019}

}

@inproceedings{arlazarov2019midv2019,

title={MIDV-2019: Challenges of the modern mobile-based document OCR},

author={Arlazarov, V.V. and Bulatov, K. and Chernov, T. and Arlazarov, V.L.},

booktitle={ICDAR},

year={2019}

}

SmartDoc Dataset (Documents)

SmartDoc Challenge dataset for document image acquisition and quality assessment.

| Dataset | Images | License | Source |

|---|---|---|---|

| SmartDoc | ~1,380 | Research use | Website |

Citation:

@inproceedings{burie2015smartdoc,

title={ICDAR 2015 Competition on Smartphone Document Capture and OCR (SmartDoc)},

author={Burie, J.C. and Chazalon, J. and Coustaty, M. and others},

booktitle={ICDAR},

year={2015}

}

COCO Dataset (Negative Samples)

Common Objects in Context dataset used for negative samples (images without documents).

| Dataset | Images | License | Source |

|---|---|---|---|

| COCO val2017 | ~4,300 | CC BY 4.0 | Website |

| COCO train2017 | ~11,400 | CC BY 4.0 | Website |

Note: Excluded categories that could be confused with documents: book, laptop, tv, cell phone, keyboard, mouse, remote, clock.

Citation:

@inproceedings{lin2014coco,

title={Microsoft COCO: Common Objects in Context},

author={Lin, Tsung-Yi and Maire, Michael and Belongie, Serge and others},

booktitle={ECCV},

year={2014}

}

Roboflow Universe (Various Documents)

Various document datasets from Roboflow Universe community.

| Category | Datasets | License | Source |

|---|---|---|---|

| Documents | document_segmentation_v2, doc_scanner, doc_rida, documento | Various (check individual) | Roboflow Universe |

| Bills/Invoices | bill_segmentation, cs_invoice | Various (check individual) | Roboflow Universe |

| Receipts | receipt_detection, receipt_occam, receipts_coolstuff | Various (check individual) | Roboflow Universe |

| ID Cards | card_corner, card_4_class, id_card_skew, id_detections, idcard_jj | Various (check individual) | Roboflow Universe |

| Passports | segment_passport | Various (check individual) | Roboflow Universe |

| Books | book_reader, page_segmentation_tecgp, book_cmjt2 | Various (check individual) | Roboflow Universe |

Note: Roboflow datasets have various licenses. Please check the individual dataset pages on Roboflow Universe for specific license terms.

Features

| Feature | Type | Description |

|---|---|---|

image |

Image | The document image (JPEG) |

filename |

string | Original filename for traceability |

is_negative |

bool | True if image contains no document |

corner_tl_x |

float32 | Top-left corner X coordinate (normalized 0-1) |

corner_tl_y |

float32 | Top-left corner Y coordinate (normalized 0-1) |

corner_tr_x |

float32 | Top-right corner X coordinate (normalized 0-1) |

corner_tr_y |

float32 | Top-right corner Y coordinate (normalized 0-1) |

corner_br_x |

float32 | Bottom-right corner X coordinate (normalized 0-1) |

corner_br_y |

float32 | Bottom-right corner Y coordinate (normalized 0-1) |

corner_bl_x |

float32 | Bottom-left corner X coordinate (normalized 0-1) |

corner_bl_y |

float32 | Bottom-left corner Y coordinate (normalized 0-1) |

Corner Order

Corners are ordered clockwise starting from top-left:

1 (TL) -------- 2 (TR)

| |

| Document |

| |

4 (BL) -------- 3 (BR)

Coordinate System

- Coordinates are normalized to the range [0, 1]

- To convert to pixel coordinates:

pixel_x = corner_x * image_width - Origin (0, 0) is at the top-left of the image

Negative Samples

Images with is_negative=True:

- Do not contain any document

- All corner coordinates are

null - Useful for training classifiers to reject non-document images

Usage

Loading the Dataset

from datasets import load_dataset

# Load all splits

dataset = load_dataset("mapo80/DocCornerDataset")

# Access specific splits

train_data = dataset["train"]

val_data = dataset["validation"]

test_data = dataset["test"]

print(f"Train: {len(train_data)} samples")

print(f"Val: {len(val_data)} samples")

print(f"Test: {len(test_data)} samples")

Iterating Over Samples

for sample in dataset["train"]:

image = sample["image"] # PIL Image

filename = sample["filename"]

if not sample["is_negative"]:

# Get corner coordinates (normalized 0-1)

corners = [

(sample["corner_tl_x"], sample["corner_tl_y"]),

(sample["corner_tr_x"], sample["corner_tr_y"]),

(sample["corner_br_x"], sample["corner_br_y"]),

(sample["corner_bl_x"], sample["corner_bl_y"]),

]

# Convert to pixel coordinates

w, h = image.size

corners_px = [(int(x * w), int(y * h)) for x, y in corners]

Visualizing Annotations

from PIL import Image, ImageDraw

def draw_corners(image, corners, color=(0, 255, 0), width=3):

"""Draw document corners on image."""

draw = ImageDraw.Draw(image)

w, h = image.size

# Convert normalized to pixel coords

points = [(int(c[0] * w), int(c[1] * h)) for c in corners]

# Draw polygon

for i in range(4):

draw.line([points[i], points[(i+1) % 4]], fill=color, width=width)

# Draw corner circles

for p in points:

r = 5

draw.ellipse([p[0]-r, p[1]-r, p[0]+r, p[1]+r], fill=color)

return image

# Example usage

sample = dataset["train"][0]

if not sample["is_negative"]:

corners = [

(sample["corner_tl_x"], sample["corner_tl_y"]),

(sample["corner_tr_x"], sample["corner_tr_y"]),

(sample["corner_br_x"], sample["corner_br_y"]),

(sample["corner_bl_x"], sample["corner_bl_y"]),

]

annotated = draw_corners(sample["image"].copy(), corners)

annotated.show()

Training a Model (PyTorch Example)

import torch

from torch.utils.data import DataLoader

from datasets import load_dataset

dataset = load_dataset("mapo80/DocCornerDataset")

def collate_fn(batch):

images = torch.stack([transform(s["image"]) for s in batch])

# Stack corner coordinates (8 values per sample)

corners = []

for s in batch:

if s["is_negative"]:

corners.append(torch.zeros(8))

else:

corners.append(torch.tensor([

s["corner_tl_x"], s["corner_tl_y"],

s["corner_tr_x"], s["corner_tr_y"],

s["corner_br_x"], s["corner_br_y"],

s["corner_bl_x"], s["corner_bl_y"],

]))

return images, torch.stack(corners)

train_loader = DataLoader(

dataset["train"],

batch_size=32,

shuffle=True,

collate_fn=collate_fn

)

Model Performance

Models trained on this dataset achieve the following performance:

| Model | Input Size | mIoU (val) | mIoU (test) |

|---|---|---|---|

| MobileNetV2 (alpha=0.35) | 224x224 | 0.9894 | 0.9826 |

| MobileNetV2 (alpha=0.35) | 256x256 | 0.9902 | 0.9819 |

mIoU = Mean Intersection over Union between predicted and ground truth quadrilaterals

Citation

If you use this dataset in your research, please cite this dataset and the original source datasets:

@dataset{doccornerdataset2025,

author = {mapo80},

title = {DocCornerDataset: Document Corner Detection Dataset},

year = {2025},

publisher = {Hugging Face},

url = {https://huggingface.co/datasets/mapo80/DocCornerDataset}

}

Please also cite the original datasets used:

- MIDV-500/MIDV-2019 (Arlazarov et al., 2019)

- SmartDoc (Burie et al., 2015)

- COCO (Lin et al., 2014)

License

⚠️ This dataset is compiled from multiple sources with different licenses.

| Source | License |

|---|---|

| MIDV-500/MIDV-2019 | Research use only |

| SmartDoc | Research use only |

| COCO | CC BY 4.0 |

| Roboflow datasets | Various (check individual datasets) |

Before using this dataset, please review the licenses of the original datasets:

- MIDV-500

- MIDV-2019

- SmartDoc

- COCO

- Roboflow Universe (check individual datasets)

Acknowledgments

This dataset was created by combining and processing multiple open-source datasets. We thank the authors of MIDV, SmartDoc, COCO, and the Roboflow community for making their data available.

Related Projects

- DocCornerNet - Document corner detection model trained on this dataset