| repo | pull_number | instance_id | issue_numbers | base_commit | patch | test_patch | problem_statement | hints_text | all_hints_text | created_at | file_path | score | PASS_TO_PASS | FAIL_TO_PASS | version | environment_setup_commit |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

dgtlmoon/changedetection.io

| 91

|

dgtlmoon__changedetection.io-91

|

[

76

] |

c0b623391252152311ff44be5884bc5429068ec2

|

diff --git a/backend/__init__.py b/backend/__init__.py

index 3c9b650e97f..b1653007026 100644

--- a/backend/__init__.py

+++ b/backend/__init__.py

@@ -468,11 +468,12 @@ def settings_page():

form.notification_body.data = datastore.data['settings']['application']['notification_body']

# Password unset is a GET

- if request.values.get('removepassword') == 'true':

+ if request.values.get('removepassword') == 'yes':

from pathlib import Path

datastore.data['settings']['application']['password'] = False

flash("Password protection removed.", 'notice')

flask_login.logout_user()

+ return redirect(url_for('settings_page'))

if request.method == 'POST' and form.validate():

@@ -577,7 +578,7 @@ def diff_history_page(uuid):

if uuid == 'first':

uuid = list(datastore.data['watching'].keys()).pop()

- extra_stylesheets = ['/static/styles/diff.css']

+ extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

try:

watch = datastore.data['watching'][uuid]

except KeyError:

@@ -634,7 +635,7 @@ def preview_page(uuid):

if uuid == 'first':

uuid = list(datastore.data['watching'].keys()).pop()

- extra_stylesheets = ['/static/styles/diff.css']

+ extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

try:

watch = datastore.data['watching'][uuid]

diff --git a/backend/templates/base.html b/backend/templates/base.html

index cc53ac6971f..649b204e322 100644

--- a/backend/templates/base.html

+++ b/backend/templates/base.html

@@ -5,8 +5,8 @@

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<meta name="description" content="Self hosted website change detection.">

<title>Change Detection{{extra_title}}</title>

- <link rel="stylesheet" href="/static/styles/pure-min.css">

- <link rel="stylesheet" href="/static/styles/styles.css?ver=1000">

+ <link rel="stylesheet" href="{{url_for('static_content', group='styles', filename='pure-min.css')}}">

+ <link rel="stylesheet" href="{{url_for('static_content', group='styles', filename='styles.css')}}">

{% if extra_stylesheets %}

{% for m in extra_stylesheets %}

<link rel="stylesheet" href="{{ m }}?ver=1000">

@@ -21,7 +21,7 @@

{% if has_password and not current_user.is_authenticated %}

<a class="pure-menu-heading" href="https://github.com/dgtlmoon/changedetection.io" rel="noopener"><strong>Change</strong>Detection.io</a>

{% else %}

- <a class="pure-menu-heading" href="/"><strong>Change</strong>Detection.io</a>

+ <a class="pure-menu-heading" href="{{url_for('index')}}"><strong>Change</strong>Detection.io</a>

{% endif %}

{% if current_diff_url %}

<a class=current-diff-url href="{{ current_diff_url }}"><span style="max-width: 30%; overflow: hidden;">{{ current_diff_url }}</span></a>

@@ -35,13 +35,13 @@

{% if current_user.is_authenticated or not has_password %}

{% if not current_diff_url %}

<li class="pure-menu-item">

- <a href="/backup" class="pure-menu-link">BACKUP</a>

+ <a href="{{ url_for('get_backup')}}" class="pure-menu-link">BACKUP</a>

</li>

<li class="pure-menu-item">

- <a href="/import" class="pure-menu-link">IMPORT</a>

+ <a href="{{ url_for('import_page')}}" class="pure-menu-link">IMPORT</a>

</li>

<li class="pure-menu-item">

- <a href="/settings" class="pure-menu-link">SETTINGS</a>

+ <a href="{{ url_for('settings_page')}}" class="pure-menu-link">SETTINGS</a>

</li>

{% else %}

<li class="pure-menu-item">

@@ -55,7 +55,7 @@

{% endif %}

{% if current_user.is_authenticated %}

- <li class="pure-menu-item"><a href="/logout" class="pure-menu-link">LOG OUT</a></li>

+ <li class="pure-menu-item"><a href="{{url_for('logout')}}" class="pure-menu-link">LOG OUT</a></li>

{% endif %}

<li class="pure-menu-item"><a class="github-link" href="https://github.com/dgtlmoon/changedetection.io">

<svg class="octicon octicon-mark-github v-align-middle" height="32" viewBox="0 0 16 16"

diff --git a/backend/templates/edit.html b/backend/templates/edit.html

index 7212c087d65..3c57c55b77d 100644

--- a/backend/templates/edit.html

+++ b/backend/templates/edit.html

@@ -67,14 +67,13 @@

</div>

<br/>

<div class="pure-control-group">

- <a href="/" class="pure-button button-small button-cancel">Cancel</a>

- <a href="/api/delete?uuid={{uuid}}"

+ <a href="{{url_for('index')}}" class="pure-button button-small button-cancel">Cancel</a>

+ <a href="{{url_for('api_delete', uuid=uuid)}}"

class="pure-button button-small button-error ">Delete</a>

</div>

</fieldset>

</form>

-

</div>

{% endblock %}

diff --git a/backend/templates/scrub.html b/backend/templates/scrub.html

index 4798bf57ecd..557095f0f13 100644

--- a/backend/templates/scrub.html

+++ b/backend/templates/scrub.html

@@ -26,7 +26,7 @@

</div>

<br/>

<div class="pure-control-group">

- <a href="/" class="pure-button button-small button-cancel">Cancel</a>

+ <a href="{{url_for('index')}}" class="pure-button button-small button-cancel">Cancel</a>

</div>

</fieldset>

</form>

diff --git a/backend/templates/settings.html b/backend/templates/settings.html

index a17ba2c7804..48733cf7655 100644

--- a/backend/templates/settings.html

+++ b/backend/templates/settings.html

@@ -12,7 +12,7 @@

</div>

<div class="pure-control-group">

{% if current_user.is_authenticated %}

- <a href="{{url_for('settings_page', removepassword='yes')}}" class="pure-button pure-button-primary">Remove password</a>

+ <a href="{{url_for('settings_page', removepassword='yes')}}" class="pure-button pure-button-primary">Remove password</a>

{% else %}

{{ render_field(form.password, size=10) }}

<span class="pure-form-message-inline">Password protection for your changedetection.io application.</span>

@@ -97,11 +97,9 @@

</div>

<br/>

<div class="pure-control-group">

- <a href="/" class="pure-button button-small button-cancel">Back</a>

- <a href="/scrub" class="pure-button button-small button-cancel">Delete History Snapshot Data</a>

+ <a href="{{url_for('index')}}" class="pure-button button-small button-cancel">Back</a>

+ <a href="{{url_for('scrub_page')}}" class="pure-button button-small button-cancel">Delete History Snapshot Data</a>

</div>

-

-

</fieldset>

</form>

diff --git a/backend/templates/watch-overview.html b/backend/templates/watch-overview.html

index 11d3776a8d7..883d96f2153 100644

--- a/backend/templates/watch-overview.html

+++ b/backend/templates/watch-overview.html

@@ -15,10 +15,10 @@

<!-- user/pass r = requests.get('https://api.github.com/user', auth=('user', 'pass')) -->

</form>

<div>

- <a href="/" class="pure-button button-tag {{'active' if not active_tag }}">All</a>

+ <a href="{{url_for('index')}}" class="pure-button button-tag {{'active' if not active_tag }}">All</a>

{% for tag in tags %}

{% if tag != "" %}

- <a href="/?tag={{ tag}}" class="pure-button button-tag {{'active' if active_tag == tag }}">{{ tag }}</a>

+ <a href="{{url_for('index', tag=tag) }}" class="pure-button button-tag {{'active' if active_tag == tag }}">{{ tag }}</a>

{% endif %}

{% endfor %}

</div>

@@ -45,7 +45,8 @@

{% if watch.paused is defined and watch.paused != False %}paused{% endif %}

{% if watch.newest_history_key| int > watch.last_viewed| int %}unviewed{% endif %}">

<td class="inline">{{ loop.index }}</td>

- <td class="inline paused-state state-{{watch.paused}}"><a href="/?pause={{ watch.uuid}}{% if active_tag %}&tag={{active_tag}}{% endif %}"><img src="/static/images/pause.svg" alt="Pause"/></a></td>

+ <td class="inline paused-state state-{{watch.paused}}"><a href="{{url_for('index', pause=watch.uuid, tag=active_tag)}}"><img src="{{url_for('static_content', group='images', filename='pause.svg')}}" alt="Pause"/></a></td>

+

<td class="title-col inline">{{watch.title if watch.title is not none and watch.title|length > 0 else watch.url}}

<a class="external" target="_blank" rel="noopener" href="{{ watch.url }}"></a>

{% if watch.last_error is defined and watch.last_error != False %}

@@ -63,14 +64,14 @@

{% endif %}

</td>

<td>

- <a href="/api/checknow?uuid={{ watch.uuid}}{% if request.args.get('tag') %}&tag={{request.args.get('tag')}}{% endif %}"

+ <a href="{{ url_for('api_watch_checknow', uuid=watch.uuid, tag=request.args.get('tag')) }}"

class="pure-button button-small pure-button-primary">Recheck</a>

- <a href="/edit/{{ watch.uuid}}" class="pure-button button-small pure-button-primary">Edit</a>

+ <a href="{{ url_for('edit_page', uuid=watch.uuid)}}" class="pure-button button-small pure-button-primary">Edit</a>

{% if watch.history|length >= 2 %}

- <a href="/diff/{{ watch.uuid}}" target="{{watch.uuid}}" class="pure-button button-small pure-button-primary">Diff</a>

+ <a href="{{ url_for('diff_history_page', uuid=watch.uuid) }}" target="{{watch.uuid}}" class="pure-button button-small pure-button-primary">Diff</a>

{% else %}

{% if watch.history|length == 1 %}

- <a href="/preview/{{ watch.uuid}}" target="{{watch.uuid}}" class="pure-button button-small pure-button-primary">Preview</a>

+ <a href="{{ url_for('preview_page', uuid=watch.uuid)}}" target="{{watch.uuid}}" class="pure-button button-small pure-button-primary">Preview</a>

{% endif %}

{% endif %}

</td>

@@ -81,15 +82,15 @@

<ul id="post-list-buttons">

{% if has_unviewed %}

<li>

- <a href="/api/mark-all-viewed" class="pure-button button-tag ">Mark all viewed</a>

+ <a href="{{url_for('mark_all_viewed', tag=request.args.get('tag')) }}" class="pure-button button-tag ">Mark all viewed</a>

</li>

{% endif %}

<li>

- <a href="/api/checknow{% if active_tag%}?tag={{active_tag}}{%endif%}" class="pure-button button-tag ">Recheck

+ <a href="{{ url_for('api_watch_checknow', tag=active_tag) }}" class="pure-button button-tag ">Recheck

all {% if active_tag%}in "{{active_tag}}"{%endif%}</a>

</li>

<li>

- <a href="{{ url_for('index', tag=active_tag , rss=true)}}"><img id="feed-icon" src="/static/images/Generic_Feed-icon.svg" height="15px"></a>

+ <a href="{{ url_for('index', tag=active_tag , rss=true)}}"><img id="feed-icon" src="{{url_for('static_content', group='images', filename='Generic_Feed-icon.svg')}}" height="15px"></a>

</li>

</ul>

</div>

diff --git a/changedetection.py b/changedetection.py

index bd959dc3f3d..c6461b62276 100755

--- a/changedetection.py

+++ b/changedetection.py

@@ -65,6 +65,18 @@ def inject_version():

has_password=datastore.data['settings']['application']['password'] != False

)

+ # Proxy sub-directory support

+ # Set environment var USE_X_SETTINGS=1 on this script

+ # And then in your proxy_pass settings

+ #

+ # proxy_set_header Host "localhost";

+ # proxy_set_header X-Forwarded-Prefix /app;

+

+ if os.getenv('USE_X_SETTINGS'):

+ print ("USE_X_SETTINGS is ENABLED\n")

+ from werkzeug.middleware.proxy_fix import ProxyFix

+ app.wsgi_app = ProxyFix(app.wsgi_app, x_prefix=1, x_host=1)

+

if ssl_mode:

# @todo finalise SSL config, but this should get you in the right direction if you need it.

eventlet.wsgi.server(eventlet.wrap_ssl(eventlet.listen(('', port)),

diff --git a/docker-compose.yml b/docker-compose.yml

index bb806657d5e..7000de353c2 100644

--- a/docker-compose.yml

+++ b/docker-compose.yml

@@ -17,8 +17,14 @@ services:

# Base URL of your changedetection.io install (Added to notification alert

# - BASE_URL="https://mysite.com"

+ # Respect proxy_pass type settings, `proxy_set_header Host "localhost";` and `proxy_set_header X-Forwarded-Prefix /app;`

+ # More here https://github.com/dgtlmoon/changedetection.io/wiki/Running-changedetection.io-behind-a-reverse-proxy-sub-directory

+ # - USE_X_SETTINGS=1

+

+ # Comment out ports: when using behind a reverse proxy , enable networks: etc.

ports:

- 5000:5000

+

restart: always

volumes:

|

diff --git a/backend/tests/test_access_control.py b/backend/tests/test_access_control.py

index f8cb5be313a..14e0880a7d5 100644

--- a/backend/tests/test_access_control.py

+++ b/backend/tests/test_access_control.py

@@ -46,7 +46,7 @@ def test_check_access_control(app, client):

assert b"LOG OUT" in res.data

# Now remove the password so other tests function, @todo this should happen before each test automatically

- res = c.get(url_for("settings_page", removepassword="true"),

+ res = c.get(url_for("settings_page", removepassword="yes"),

follow_redirects=True)

assert b"Password protection removed." in res.data

@@ -93,7 +93,7 @@ def test_check_access_no_remote_access_to_remove_password(app, client):

assert b"Password protection enabled." in res.data

assert b"Login" in res.data

- res = c.get(url_for("settings_page", removepassword="true"),

+ res = c.get(url_for("settings_page", removepassword="yes"),

follow_redirects=True)

assert b"Password protection removed." not in res.data

|

[Feature] Add URL Base Subfolder Option

My changedetection.io server is behind a reverse proxy and I use individual folders per site. I see a new URL base path option for just notifications but no way to specify subfolders for the site.

Ex: domain.com/changedetection.io/

|

@aaronrunkle I had a bit of a dig around but couldn't figure it out. I think it's because I'm using the eventlet wrapper, which does not pick up the script_path properly... other wrappers like `gunicorn` I think will do it better... or `uwsgi`, unsure... help welcome

so `http://127.0.0.1/app` loads, but the links in the watch-table (and styles) don't see the `/app` ...

two good resources

https://www.digitalocean.com/community/tutorials/how-to-serve-flask-applications-with-uswgi-and-nginx-on-ubuntu-18-04

https://github.com/tiangolo/uwsgi-nginx-flask-docker/tree/master/example-flask-python3.8/app

|

@aaronrunkle I had a bit of a dig around but couldn't figure it out. I think it's because I'm using the eventlet wrapper, which does not pick up the script_path properly... other wrappers like `gunicorn` I think will do it better... or `uwsgi`, unsure... help welcome

so `http://127.0.0.1/app` loads, but the links in the watch-table (and styles) don't see the `/app` ...

two good resources

https://www.digitalocean.com/community/tutorials/how-to-serve-flask-applications-with-uswgi-and-nginx-on-ubuntu-18-04

https://github.com/tiangolo/uwsgi-nginx-flask-docker/tree/master/example-flask-python3.8/app

From https://nickjanetakis.com/blog/best-practices-around-production-ready-web-apps-with-docker-compose

Also.. need to change to

```

# Good (preferred).

CMD ["gunicorn", "-c", "python:config.gunicorn", "hello.app:create_app()"]

```

@dgtlmoon Thanks!

Looks like it's mostly working for me. Three issues I've found thus far -

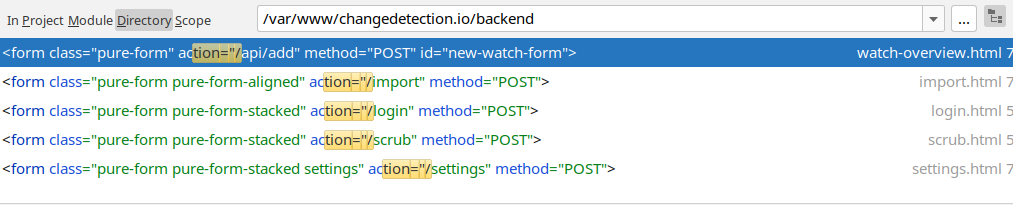

The save button doesn't seem to redirect properly. Looks like /settings is hardcoded here:

https://github.com/dgtlmoon/changedetection.io/blob/master/backend/templates/settings.html#L7

Same with /login when a password is set: https://github.com/dgtlmoon/changedetection.io/blob/master/backend/templates/login.html#L5

Also cannot add new watched items (/api/add):

https://github.com/dgtlmoon/changedetection.io/blob/master/backend/templates/watch-overview.html#L7

@aaronrunkle thanks for the feedback! just pushed a commit now, give it 30min~ to rebuild

Working great so far; thank you!

@dgtlmoon Actually found one issue - the diff.js link needs to be updated as well:

https://github.com/dgtlmoon/changedetection.io/blob/cd622261e9fb925460832b2d2cea4220130e6d6b/backend/templates/diff.html#L55

@dgtlmoon

Some more

https://github.com/dgtlmoon/changedetection.io/blob/master/backend/static/styles/styles.css#L96

https://github.com/dgtlmoon/changedetection.io/blob/master/backend/static/styles/styles.scss#L127

@aaronrunkle hmmm how to solve that one?

@dgtlmoon You can use relative URLs in the CSS URL function, like `../images/gradient-border.png`

@deakster won't work in the case that the page is `/edit/foobar` or `/confirm/delete/watch` or something, must be a smarter way, see my link there, using a regex in the route

https://github.com/dgtlmoon/changedetection.io/pull/141#issuecomment-882038304

It might be considered a hack, but using a parent path (`../`) as in my PR #272 for gradient-border.png works fine. For the docker implementations of this project, that appears valid (i.e. parent paths are not disallowed in the web server). For personal implementations in other web engines, they would need to allow parent paths if they are disallowed by default, or had been explicitly disallowed by the admin. If an admin had gone to the trouble of disabling parent paths, I would like to think that they also understand how to rewrite HTML and turn the path back into a valid one for their specific situation.

My knowledge of web servers is not strong, so parent paths may not be an issue if the url does not go above the root.
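For reference, a relative `url()` in a stylesheet is resolved against the URL of the stylesheet itself, not the page that links it, so `../images/gradient-border.png` should land in the same place whatever page is being viewed. A minimal sketch that mimics that resolution with Python's `urllib.parse.urljoin`; the host, the `/app` prefix and the `static/styles/styles.css` location are assumptions for illustration, and `resolve_css_url` is a hypothetical helper, not part of the project:

```python
from urllib.parse import urljoin


def resolve_css_url(stylesheet_url, css_reference):
    """Resolve a reference found in url(...) relative to the stylesheet's own URL."""
    return urljoin(stylesheet_url, css_reference)


# Assumed deployment: app proxied under /app, stylesheet served from static/styles/
stylesheet = "http://127.0.0.1/app/static/styles/styles.css"
resolved = resolve_css_url(stylesheet, "../images/gradient-border.png")
print(resolved)
```

The same call with a stylesheet URL that has no `/app` prefix resolves to the un-prefixed image path, so one relative reference covers both the sub-directory and the plain `http://ip:port` setups.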

AFAIK you dont specify the sub-folder when your server is configured correctly, it will automatically learn it

for example here https://github.com/dgtlmoon/changedetection.io/wiki/Running-changedetection.io-behind-a-reverse-proxy-sub-directory

if you are running the application at `http://myserver.com/app` for example.. I think this resolves it.. if not.. please open a new issue with the individual issues

Yes, the local (to the changedetection.io instance) web server sort of auto-learns the subfolder - I suspect this is a feature of the framework you are using. It makes implementation (for the user) very simple, the only downside is that for any elements that need absolute paths specified for some reason, you have no awareness of the correct full path and run into issues like with gradient-border.png.

Could you detect the `X-Forwarded-Prefix` header and use it to pre-pend on elements? That would be a cleaner solution than my `../` hack.
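As a rough illustration of that idea, the sketch below shows a tiny WSGI middleware that copies `X-Forwarded-Prefix` into `SCRIPT_NAME`, so links built from `SCRIPT_NAME` pick up the sub-directory. It uses only the standard library; `prefix_middleware`, `build_url`, `demo_app` and `call` are hypothetical names, and this is not how the project or werkzeug's `ProxyFix` is actually implemented, only the general mechanism under those assumptions:

```python
from wsgiref.util import setup_testing_defaults


def prefix_middleware(app):
    """Copy the X-Forwarded-Prefix header into SCRIPT_NAME (illustrative only)."""
    def wrapped(environ, start_response):
        prefix = environ.get("HTTP_X_FORWARDED_PREFIX", "").rstrip("/")
        if prefix:
            environ["SCRIPT_NAME"] = prefix
        return app(environ, start_response)
    return wrapped


def build_url(environ, path):
    """Build an application link that respects the mount point in SCRIPT_NAME."""
    return environ.get("SCRIPT_NAME", "") + path


def demo_app(environ, start_response):
    """Toy app that answers with the link it would generate for /settings."""
    body = build_url(environ, "/settings").encode()
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [body]


def call(app, headers=None):
    """Invoke a WSGI app once with a default test environ plus extra CGI-style keys."""
    environ = {}
    setup_testing_defaults(environ)
    environ.update(headers or {})
    return b"".join(app(environ, lambda status, hdrs: None)).decode()


wrapped_app = prefix_middleware(demo_app)
print(call(wrapped_app, {"HTTP_X_FORWARDED_PREFIX": "/app"}))
print(call(wrapped_app))
```

Frameworks that generate links from `SCRIPT_NAME` benefit from this automatically; static files such as CSS never pass through link generation, which is why references inside them need a separate approach.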

@jeremysherriff can you tell me which elements aren't functioning still? There are better ways in Flask to handle it (you can set a special regex handler for the request path for example)

@dgtlmoon as per my PR #272 there are only two that I have found:

- gradient-border.png as specified in styles.css, and

- Google-Chrome-icon.png in watch-overview.html

The edits in that PR cater for both reverse-proxy+subfolder and standard http://ip:port implementations.

Note that the reverse-proxy configuration needs a minor tweak, as per my comments in issue #273 (relates specifically to the edit for styles.css). Without this, people still wouldn't see the border but that's the only effect.

Can you use a regex handler in Flask to resolve those static components?

| 2021-06-16T12:57:18

|

/net/extradisks0/srv/nfs/guneshwors-extra-data/raw_tasks_with_metadata_restructured_mini-50-5-5.jsonl

| 3

|

[

"test1"

] |

[

"test2"

] |

v1.0.0

|

addsdbcarewfdsvsad

|

deepset-ai/haystack

| 3,445

|

deepset-ai__haystack-3445

|

[

3056

] |

df4d20d32ce77f92456ab23e3f7c69ed59ad7b3b

|

diff --git a/docs/_src/api/api/file_converter.md b/docs/_src/api/api/file_converter.md

index 8fd6a86c3e..e954390de1 100644

--- a/docs/_src/api/api/file_converter.md

+++ b/docs/_src/api/api/file_converter.md

@@ -17,10 +17,7 @@ Base class for implementing file converts to transform input documents to text f

#### BaseConverter.\_\_init\_\_

```python

-def __init__(remove_numeric_tables: bool = False,

- valid_languages: Optional[List[str]] = None,

- id_hash_keys: Optional[List[str]] = None,

- progress_bar: bool = True)

+def __init__(remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None, progress_bar: bool = True)

```

**Arguments**:

@@ -47,12 +44,7 @@ In this case the id will be generated by using the content and the defined metad

```python

@abstractmethod

-def convert(file_path: Path,

- meta: Optional[Dict[str, Any]],

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "UTF-8",

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, Any]], remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "UTF-8", id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Convert a file to a dictionary containing the text and any associated meta data.

@@ -85,8 +77,7 @@ In this case the id will be generated by using the content and the defined metad

#### BaseConverter.validate\_language

```python

-def validate_language(text: str,

- valid_languages: Optional[List[str]] = None) -> bool

+def validate_language(text: str, valid_languages: Optional[List[str]] = None) -> bool

```

Validate if the language of the text is one of valid languages.

@@ -96,14 +87,7 @@ Validate if the language of the text is one of valid languages.

#### BaseConverter.run

```python

-def run(file_paths: Union[Path, List[Path]],

- meta: Optional[Union[Dict[str, str],

- List[Optional[Dict[str, str]]]]] = None,

- remove_numeric_tables: Optional[bool] = None,

- known_ligatures: Dict[str, str] = KNOWN_LIGATURES,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "UTF-8",

- id_hash_keys: Optional[List[str]] = None)

+def run(file_paths: Union[Path, List[Path]], meta: Optional[Union[Dict[str, str], List[Optional[Dict[str, str]]]]] = None, remove_numeric_tables: Optional[bool] = None, known_ligatures: Dict[str, str] = KNOWN_LIGATURES, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "UTF-8", id_hash_keys: Optional[List[str]] = None)

```

Extract text from a file.

@@ -153,12 +137,7 @@ class DocxToTextConverter(BaseConverter)

#### DocxToTextConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, str]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Extract text from a .docx file.

@@ -203,9 +182,7 @@ class ImageToTextConverter(BaseConverter)

#### ImageToTextConverter.\_\_init\_\_

```python

-def __init__(remove_numeric_tables: bool = False,

- valid_languages: Optional[List[str]] = ["eng"],

- id_hash_keys: Optional[List[str]] = None)

+def __init__(remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = ["eng"], id_hash_keys: Optional[List[str]] = None)

```

**Arguments**:

@@ -232,12 +209,7 @@ In this case the id will be generated by using the content and the defined metad

#### ImageToTextConverter.convert

```python

-def convert(file_path: Union[Path, str],

- meta: Optional[Dict[str, str]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Union[Path, str], meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Extract text from image file using the pytesseract library (https://github.com/madmaze/pytesseract)

@@ -275,47 +247,49 @@ In this case the id will be generated by using the content and the defined metad

class MarkdownConverter(BaseConverter)

```

-<a id="markdown.MarkdownConverter.convert"></a>

+<a id="markdown.MarkdownConverter.__init__"></a>

-#### MarkdownConverter.convert

+#### MarkdownConverter.\_\_init\_\_

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, str]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "utf-8",

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def __init__(remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None, progress_bar: bool = True, remove_code_snippets: bool = True, extract_headlines: bool = False)

```

-Reads text from a markdown file and executes optional preprocessing steps.

-

**Arguments**:

-- `file_path`: path of the file to convert

-- `meta`: dictionary of meta data key-value pairs to append in the returned document.

-- `encoding`: Select the file encoding (default is `utf-8`)

-- `remove_numeric_tables`: Not applicable

-- `valid_languages`: Not applicable

+- `remove_numeric_tables`: Not applicable.

+- `valid_languages`: Not applicable.

- `id_hash_keys`: Generate the document id from a custom list of strings that refer to the document's

attributes. If you want to ensure you don't have duplicate documents in your DocumentStore but texts are

not unique, you can modify the metadata and pass e.g. `"meta"` to this field (e.g. [`"content"`, `"meta"`]).

In this case the id will be generated by using the content and the defined metadata.

+- `progress_bar`: Show a progress bar for the conversion.

+- `remove_code_snippets`: Whether to remove snippets from the markdown file.

+- `extract_headlines`: Whether to extract headings from the markdown file.

-<a id="markdown.MarkdownConverter.markdown_to_text"></a>

+<a id="markdown.MarkdownConverter.convert"></a>

-#### MarkdownConverter.markdown\_to\_text

+#### MarkdownConverter.convert

```python

-@staticmethod

-def markdown_to_text(markdown_string: str) -> str

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "utf-8", id_hash_keys: Optional[List[str]] = None, remove_code_snippets: Optional[bool] = None, extract_headlines: Optional[bool] = None) -> List[Document]

```

-Converts a markdown string to plaintext

+Reads text from a txt file and executes optional preprocessing steps.

**Arguments**:

-- `markdown_string`: String in markdown format

+- `file_path`: path of the file to convert

+- `meta`: dictionary of meta data key-value pairs to append in the returned document.

+- `encoding`: Select the file encoding (default is `utf-8`)

+- `remove_numeric_tables`: Not applicable

+- `valid_languages`: Not applicable

+- `id_hash_keys`: Generate the document id from a custom list of strings that refer to the document's

+attributes. If you want to ensure you don't have duplicate documents in your DocumentStore but texts are

+not unique, you can modify the metadata and pass e.g. `"meta"` to this field (e.g. [`"content"`, `"meta"`]).

+In this case the id will be generated by using the content and the defined metadata.

+- `remove_code_snippets`: Whether to remove snippets from the markdown file.

+- `extract_headlines`: Whether to extract headings from the markdown file.

<a id="pdf"></a>

@@ -334,11 +308,7 @@ class PDFToTextConverter(BaseConverter)

#### PDFToTextConverter.\_\_init\_\_

```python

-def __init__(remove_numeric_tables: bool = False,

- valid_languages: Optional[List[str]] = None,

- id_hash_keys: Optional[List[str]] = None,

- encoding: Optional[str] = "UTF-8",

- keep_physical_layout: bool = False)

+def __init__(remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None, encoding: Optional[str] = "UTF-8")

```

**Arguments**:

@@ -360,20 +330,13 @@ In this case the id will be generated by using the content and the defined metad

- `encoding`: Encoding that will be passed as `-enc` parameter to `pdftotext`.

Defaults to "UTF-8" in order to support special characters (e.g. German Umlauts, Cyrillic ...).

(See list of available encodings, such as "Latin1", by running `pdftotext -listenc` in the terminal)

-- `keep_physical_layout`: This option will maintain original physical layout on the extracted text.

-It works by passing the `-layout` parameter to `pdftotext`. When disabled, PDF is read in the stream order.

<a id="pdf.PDFToTextConverter.convert"></a>

#### PDFToTextConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, Any]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Extract text from a .pdf file using the pdftotext library (https://www.xpdfreader.com/pdftotext-man.html)

@@ -395,8 +358,6 @@ not one of the valid languages, then it might likely be encoding error resulting

in garbled text.

- `encoding`: Encoding that overwrites self.encoding and will be passed as `-enc` parameter to `pdftotext`.

(See list of available encodings by running `pdftotext -listenc` in the terminal)

-- `keep_physical_layout`: This option will maintain original physical layout on the extracted text.

-It works by passing the `-layout` parameter to `pdftotext`. When disabled, PDF is read in the stream order.

- `id_hash_keys`: Generate the document id from a custom list of strings that refer to the document's

attributes. If you want to ensure you don't have duplicate documents in your DocumentStore but texts are

not unique, you can modify the metadata and pass e.g. `"meta"` to this field (e.g. [`"content"`, `"meta"`]).

@@ -415,9 +376,7 @@ class PDFToTextOCRConverter(BaseConverter)

#### PDFToTextOCRConverter.\_\_init\_\_

```python

-def __init__(remove_numeric_tables: bool = False,

- valid_languages: Optional[List[str]] = ["eng"],

- id_hash_keys: Optional[List[str]] = None)

+def __init__(remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = ["eng"], id_hash_keys: Optional[List[str]] = None)

```

Extract text from image file using the pytesseract library (https://github.com/madmaze/pytesseract)

@@ -444,12 +403,7 @@ In this case the id will be generated by using the content and the defined metad

#### PDFToTextOCRConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, Any]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Convert a file to a dictionary containing the text and any associated meta data.

@@ -499,17 +453,7 @@ Supported file formats are: PDF, DOCX

#### ParsrConverter.\_\_init\_\_

```python

-def __init__(parsr_url: str = "http://localhost:3001",

- extractor: Literal["pdfminer", "pdfjs"] = "pdfminer",

- table_detection_mode: Literal["lattice", "stream"] = "lattice",

- preceding_context_len: int = 3,

- following_context_len: int = 3,

- remove_page_headers: bool = False,

- remove_page_footers: bool = False,

- remove_table_of_contents: bool = False,

- valid_languages: Optional[List[str]] = None,

- id_hash_keys: Optional[List[str]] = None,

- add_page_number: bool = True)

+def __init__(parsr_url: str = "http://localhost:3001", extractor: Literal["pdfminer", "pdfjs"] = "pdfminer", table_detection_mode: Literal["lattice", "stream"] = "lattice", preceding_context_len: int = 3, following_context_len: int = 3, remove_page_headers: bool = False, remove_page_footers: bool = False, remove_table_of_contents: bool = False, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None, add_page_number: bool = True)

```

**Arguments**:

@@ -543,12 +487,7 @@ In this case the id will be generated by using the content and the defined metad

#### ParsrConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, Any]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "utf-8",

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, Any]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "utf-8", id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Extract text and tables from a PDF or DOCX using the open-source Parsr tool.

@@ -597,16 +536,7 @@ https://docs.microsoft.com/en-us/azure/applied-ai-services/form-recognizer/quick

#### AzureConverter.\_\_init\_\_

```python

-def __init__(endpoint: str,

- credential_key: str,

- model_id: str = "prebuilt-document",

- valid_languages: Optional[List[str]] = None,

- save_json: bool = False,

- preceding_context_len: int = 3,

- following_context_len: int = 3,

- merge_multiple_column_headers: bool = True,

- id_hash_keys: Optional[List[str]] = None,

- add_page_number: bool = True)

+def __init__(endpoint: str, credential_key: str, model_id: str = "prebuilt-document", valid_languages: Optional[List[str]] = None, save_json: bool = False, preceding_context_len: int = 3, following_context_len: int = 3, merge_multiple_column_headers: bool = True, id_hash_keys: Optional[List[str]] = None, add_page_number: bool = True)

```

**Arguments**:

@@ -641,14 +571,7 @@ In this case the id will be generated by using the content and the defined metad

#### AzureConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, Any]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "utf-8",

- id_hash_keys: Optional[List[str]] = None,

- pages: Optional[str] = None,

- known_language: Optional[str] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, Any]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "utf-8", id_hash_keys: Optional[List[str]] = None, pages: Optional[str] = None, known_language: Optional[str] = None) -> List[Document]

```

Extract text and tables from a PDF, JPEG, PNG, BMP or TIFF file using Azure's Form Recognizer service.

@@ -680,11 +603,7 @@ See supported locales here: https://aka.ms/azsdk/formrecognizer/supportedlocales

#### AzureConverter.convert\_azure\_json

```python

-def convert_azure_json(

- file_path: Path,

- meta: Optional[Dict[str, Any]] = None,

- valid_languages: Optional[List[str]] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert_azure_json(file_path: Path, meta: Optional[Dict[str, Any]] = None, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Extract text and tables from the JSON output of Azure's Form Recognizer service.

@@ -721,10 +640,7 @@ class TikaConverter(BaseConverter)

#### TikaConverter.\_\_init\_\_

```python

-def __init__(tika_url: str = "http://localhost:9998/tika",

- remove_numeric_tables: bool = False,

- valid_languages: Optional[List[str]] = None,

- id_hash_keys: Optional[List[str]] = None)

+def __init__(tika_url: str = "http://localhost:9998/tika", remove_numeric_tables: bool = False, valid_languages: Optional[List[str]] = None, id_hash_keys: Optional[List[str]] = None)

```

**Arguments**:

@@ -750,12 +666,7 @@ In this case the id will be generated by using the content and the defined metad

#### TikaConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, str]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = None,

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = None, id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

**Arguments**:

@@ -799,12 +710,7 @@ class TextConverter(BaseConverter)

#### TextConverter.convert

```python

-def convert(file_path: Path,

- meta: Optional[Dict[str, str]] = None,

- remove_numeric_tables: Optional[bool] = None,

- valid_languages: Optional[List[str]] = None,

- encoding: Optional[str] = "utf-8",

- id_hash_keys: Optional[List[str]] = None) -> List[Document]

+def convert(file_path: Path, meta: Optional[Dict[str, str]] = None, remove_numeric_tables: Optional[bool] = None, valid_languages: Optional[List[str]] = None, encoding: Optional[str] = "utf-8", id_hash_keys: Optional[List[str]] = None) -> List[Document]

```

Reads text from a txt file and executes optional preprocessing steps.

diff --git a/haystack/json-schemas/haystack-pipeline-1.11.0rc0.schema.json b/haystack/json-schemas/haystack-pipeline-1.11.0rc0.schema.json

index 5411f0e63b..ce36bc76bf 100644

--- a/haystack/json-schemas/haystack-pipeline-1.11.0rc0.schema.json

+++ b/haystack/json-schemas/haystack-pipeline-1.11.0rc0.schema.json

@@ -2421,6 +2421,11 @@

"additionalProperties": false,

"description": "Each parameter can reference other components defined in the same YAML file.",

"properties": {

+ "extract_headlines": {

+ "default": false,

+ "title": "Extract Headlines",

+ "type": "boolean"

+ },

"id_hash_keys": {

"anyOf": [

{

@@ -2440,6 +2445,11 @@

"title": "Progress Bar",

"type": "boolean"

},

+ "remove_code_snippets": {

+ "default": true,

+ "title": "Remove Code Snippets",

+ "type": "boolean"

+ },

"remove_numeric_tables": {

"default": false,

"title": "Remove Numeric Tables",

diff --git a/haystack/json-schemas/haystack-pipeline-main.schema.json b/haystack/json-schemas/haystack-pipeline-main.schema.json

index a286d64d16..d36511a08b 100644

--- a/haystack/json-schemas/haystack-pipeline-main.schema.json

+++ b/haystack/json-schemas/haystack-pipeline-main.schema.json

@@ -2421,6 +2421,11 @@

"additionalProperties": false,

"description": "Each parameter can reference other components defined in the same YAML file.",

"properties": {

+ "extract_headlines": {

+ "default": false,

+ "title": "Extract Headlines",

+ "type": "boolean"

+ },

"id_hash_keys": {

"anyOf": [

{

@@ -2440,6 +2445,11 @@

"title": "Progress Bar",

"type": "boolean"

},

+ "remove_code_snippets": {

+ "default": true,

+ "title": "Remove Code Snippets",

+ "type": "boolean"

+ },

"remove_numeric_tables": {

"default": false,

"title": "Remove Numeric Tables",

diff --git a/haystack/nodes/file_converter/markdown.py b/haystack/nodes/file_converter/markdown.py

index e539bac789..7c5af831c9 100644

--- a/haystack/nodes/file_converter/markdown.py

+++ b/haystack/nodes/file_converter/markdown.py

@@ -1,10 +1,10 @@

import logging

import re

from pathlib import Path

-from typing import Dict, List, Optional

+from typing import Dict, List, Optional, Tuple, Any

try:

- from bs4 import BeautifulSoup

+ from bs4 import BeautifulSoup, NavigableString

from markdown import markdown

except (ImportError, ModuleNotFoundError) as ie:

from haystack.utils.import_utils import _optional_component_not_installed

@@ -19,14 +19,46 @@

class MarkdownConverter(BaseConverter):

+ def __init__(

+ self,

+ remove_numeric_tables: bool = False,

+ valid_languages: Optional[List[str]] = None,

+ id_hash_keys: Optional[List[str]] = None,

+ progress_bar: bool = True,

+ remove_code_snippets: bool = True,

+ extract_headlines: bool = False,

+ ):

+ """

+ :param remove_numeric_tables: Not applicable.

+ :param valid_languages: Not applicable.

+ :param id_hash_keys: Generate the document ID from a custom list of strings that refer to the document's

+ attributes. To make sure you don't have duplicate documents in your DocumentStore if texts are

+ not unique, you can modify the metadata and pass, for example, `"meta"` to this field ([`"content"`, `"meta"`]).

+ In this case, the ID is generated by using the content and the defined metadata.

+ :param progress_bar: Show a progress bar for the conversion.

+ :param remove_code_snippets: Whether to remove code snippets from the markdown file.

+ :param extract_headlines: Whether to extract headings from the markdown file.

+ """

+ super().__init__(

+ remove_numeric_tables=remove_numeric_tables,

+ valid_languages=valid_languages,

+ id_hash_keys=id_hash_keys,

+ progress_bar=progress_bar,

+ )

+

+ self.remove_code_snippets = remove_code_snippets

+ self.extract_headlines = extract_headlines

+

def convert(

self,

file_path: Path,

- meta: Optional[Dict[str, str]] = None,

+ meta: Optional[Dict[str, Any]] = None,

remove_numeric_tables: Optional[bool] = None,

valid_languages: Optional[List[str]] = None,

encoding: Optional[str] = "utf-8",

id_hash_keys: Optional[List[str]] = None,

+ remove_code_snippets: Optional[bool] = None,

+ extract_headlines: Optional[bool] = None,

) -> List[Document]:

"""

Reads text from a markdown file and executes optional preprocessing steps.

@@ -40,32 +72,53 @@ def convert(

attributes. If you want to ensure you don't have duplicate documents in your DocumentStore but texts are

not unique, you can modify the metadata and pass e.g. `"meta"` to this field (e.g. [`"content"`, `"meta"`]).

In this case the id will be generated by using the content and the defined metadata.

+ :param remove_code_snippets: Whether to remove code snippets from the markdown file.

+ :param extract_headlines: Whether to extract headings from the markdown file.

"""

- if id_hash_keys is None:

- id_hash_keys = self.id_hash_keys

+

+ id_hash_keys = id_hash_keys if id_hash_keys is not None else self.id_hash_keys

+ remove_code_snippets = remove_code_snippets if remove_code_snippets is not None else self.remove_code_snippets

+ extract_headlines = extract_headlines if extract_headlines is not None else self.extract_headlines

+

with open(file_path, encoding=encoding, errors="ignore") as f:

markdown_text = f.read()

- text = self.markdown_to_text(markdown_text)

+

+ # md -> html -> text since BeautifulSoup can extract text cleanly

+ html = markdown(markdown_text)

+

+ # remove code snippets

+ if remove_code_snippets:

+ html = re.sub(r"<pre>(.*?)</pre>", " ", html, flags=re.DOTALL)

+ html = re.sub(r"<code>(.*?)</code>", " ", html, flags=re.DOTALL)

+ soup = BeautifulSoup(html, "html.parser")

+

+ if extract_headlines:

+ text, headlines = self._extract_text_and_headlines(soup)

+ if meta is None:

+ meta = {}

+ meta["headlines"] = headlines

+ else:

+ text = soup.get_text()

+

document = Document(content=text, meta=meta, id_hash_keys=id_hash_keys)

return [document]

- # Following code snippet is copied from https://gist.github.com/lorey/eb15a7f3338f959a78cc3661fbc255fe

@staticmethod

- def markdown_to_text(markdown_string: str) -> str:

+ def _extract_text_and_headlines(soup: BeautifulSoup) -> Tuple[str, List[Dict]]:

"""

- Converts a markdown string to plaintext

-

- :param markdown_string: String in markdown format

+ Extracts text and headings from a soup object.

"""

- # md -> html -> text since BeautifulSoup can extract text cleanly

- html = markdown(markdown_string)

+ headline_tags = {"h1", "h2", "h3", "h4", "h5", "h6"}

+ headlines = []

+ text = ""

+ for desc in soup.descendants:

+ if desc.name in headline_tags:

+ current_headline = desc.get_text()

+ current_start_idx = len(text)

+ current_level = int(desc.name[-1]) - 1

+ headlines.append({"headline": current_headline, "start_idx": current_start_idx, "level": current_level})

- # remove code snippets

- html = re.sub(r"<pre>(.*?)</pre>", " ", html)

- html = re.sub(r"<code>(.*?)</code >", " ", html)

-

- # extract text

- soup = BeautifulSoup(html, "html.parser")

- text = "".join(soup.findAll(text=True))

+ if isinstance(desc, NavigableString):

+ text += desc.get_text()

- return text

+ return text, headlines
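
Note on the `markdown.py` change above: `_extract_text_and_headlines` walks the parsed HTML, appends every text node to the output, and records each heading's text, its character offset in the accumulated text (`start_idx`), and its zero-based level. The sketch below illustrates the same bookkeeping using only the standard library's `html.parser` instead of BeautifulSoup, so it is not the code from this patch; the names `_HeadlineCollector` and `extract_text_and_headlines` are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

HEADLINE_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class _HeadlineCollector(HTMLParser):
    """Hypothetical helper: accumulates plain text and records headline offsets."""

    def __init__(self) -> None:
        super().__init__()
        self.text = ""
        self.headlines: List[Dict] = []
        self._open_headline: Optional[Dict] = None  # headline currently being filled

    def handle_starttag(self, tag, attrs):
        if tag in HEADLINE_TAGS:
            # The headline starts where the accumulated text currently ends.
            self._open_headline = {
                "headline": "",
                "start_idx": len(self.text),
                "level": int(tag[-1]) - 1,  # h1 -> 0, h2 -> 1, ...
            }

    def handle_endtag(self, tag):
        if tag in HEADLINE_TAGS and self._open_headline is not None:
            self.headlines.append(self._open_headline)
            self._open_headline = None

    def handle_data(self, data):
        if self._open_headline is not None:
            self._open_headline["headline"] += data
        self.text += data


def extract_text_and_headlines(html: str) -> Tuple[str, List[Dict]]:
    collector = _HeadlineCollector()
    collector.feed(html)
    collector.close()
    return collector.text, collector.headlines


text, headlines = extract_text_and_headlines(
    "<h1>Intro</h1>\n<p>Body text.</p>\n<h2>Details</h2>\n<p>More.</p>"
)
for hl in headlines:
    # Each start_idx points at the headline's first character in the extracted text.
    print(hl, repr(text[hl["start_idx"] : hl["start_idx"] + len(hl["headline"])]))
```

Because `start_idx` is an offset into the extracted text, any later step that deletes characters from that text has to shift the offsets accordingly.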

diff --git a/haystack/nodes/preprocessor/preprocessor.py b/haystack/nodes/preprocessor/preprocessor.py

index 985cf640d4..fe69fb7db9 100644

--- a/haystack/nodes/preprocessor/preprocessor.py

+++ b/haystack/nodes/preprocessor/preprocessor.py

@@ -3,7 +3,7 @@

from copy import deepcopy

from functools import partial, reduce

from itertools import chain

-from typing import List, Optional, Generator, Set, Union

+from typing import List, Optional, Generator, Set, Union, Tuple, Dict

try:

from typing import Literal

@@ -47,8 +47,6 @@

"ml": "malayalam",

}

-EMPTY_PAGE_PLACEHOLDER = "@@@HAYSTACK_KEEP_PAGE@@@."

-

class PreProcessor(BasePreProcessor):

def __init__(

@@ -261,35 +259,22 @@ def clean(

text, n_chars=300, n_first_pages_to_ignore=1, n_last_pages_to_ignore=1

)

- if clean_whitespace:

- pages = text.split("\f")

- cleaned_pages = []

- for page in pages:

- if not page:

- # there are many "empty text" pages in a marketing document, as for example the cover page. If we just forget about them, we have a mismatch

- # with page numbers which causes problems later on. Therefore, we replace them with a dummy text, which will not be found by any query.

- cleaned_page = EMPTY_PAGE_PLACEHOLDER

- else:

- lines = page.splitlines()

- cleaned_lines = []

- for line in lines:

- line = line.strip()

- cleaned_lines.append(line)

- cleaned_page = "\n".join(cleaned_lines)

-

- cleaned_pages.append(cleaned_page)

+ headlines = document.meta["headlines"] if "headlines" in document.meta else []

- text = "\f".join(cleaned_pages)

+ if clean_whitespace:

+ text, headlines = self._clean_whitespace(text=text, headlines=headlines)

if clean_empty_lines:

- text = re.sub(r"\n\n+", "\n\n", text)

+ text, headlines = self._clean_empty_lines(text=text, headlines=headlines)

for substring in remove_substrings:

- text = text.replace(substring, "")

+ text, headlines = self._remove_substring(text=text, substring=substring, headlines=headlines)

if text != document.content:

document = deepcopy(document)

document.content = text

+ if headlines:

+ document.meta["headlines"] = headlines

return document

@@ -328,131 +313,302 @@ def split(

return [document]

text = document.content

+ headlines = document.meta["headlines"] if "headlines" in document.meta else []

if split_respect_sentence_boundary and split_by == "word":

- # split by words ensuring no sub sentence splits

- if self.add_page_number:

- # SentenceTokenizer will remove "\f" if it is at the end of a sentence, so substituting it in these

- # cases for "[NEW_PAGE]" to don't lose any page breaks.

- text = self._substitute_page_breaks(text)

- sentences = self._split_sentences(text)

-

- word_count_slice = 0

- cur_page = 1

- splits_pages = []

- list_splits = []

- current_slice: List[str] = []

- for sen in sentences:

- if self.add_page_number and "[NEW_PAGE]" in sen:

- sen = sen.replace("[NEW_PAGE]", "\f")

-

- word_count_sen = len(sen.split(" "))

- if word_count_sen > split_length:

- long_sentence_message = f"One or more sentence found with word count higher than the split length."

- if long_sentence_message not in self.print_log:

- self.print_log.add(long_sentence_message)

- logger.warning(long_sentence_message)

- if word_count_slice + word_count_sen > split_length:

- # Number of words exceeds split_length -> save current slice and start a new one

- if current_slice:

- list_splits.append(current_slice)

- splits_pages.append(cur_page)

-

- if split_overlap:

- overlap = []

- processed_sents = []

- word_count_overlap = 0

- current_slice_copy = deepcopy(current_slice)

- for idx, s in reversed(list(enumerate(current_slice))):

- sen_len = len(s.split(" "))

- if word_count_overlap < split_overlap:

- overlap.append(s)

- word_count_overlap += sen_len

- current_slice_copy.pop(idx)

- else:

- processed_sents = current_slice_copy

- break

- current_slice = list(reversed(overlap))

- word_count_slice = word_count_overlap

- else:

- processed_sents = current_slice

- current_slice = []

- word_count_slice = 0

-

- # Count number of page breaks in processed sentences

- if self.add_page_number:

- num_page_breaks = self._count_processed_page_breaks(

- sentences=processed_sents,

- split_overlap=split_overlap,

- overlapping_sents=current_slice,

- current_sent=sen,

- )

- cur_page += num_page_breaks

-

- current_slice.append(sen)

- word_count_slice += word_count_sen

-

- if current_slice:

- list_splits.append(current_slice)

- splits_pages.append(cur_page)

-

- text_splits = []

- for sl in list_splits:

- txt = " ".join(sl)

- if len(txt) > 0:

- text_splits.append(txt)

+ text_splits, splits_pages, splits_start_idxs = self._split_by_word_respecting_sent_boundary(

+ text=text, split_length=split_length, split_overlap=split_overlap

+ )

else:

# create individual "elements" of passage, sentence, or word

- if split_by == "passage":

- elements = text.split("\n\n")

- elif split_by == "sentence":

- if self.add_page_number:

- # SentenceTokenizer will remove "\f" if it is at the end of a sentence, so substituting it in these

- # cases for "[NEW_PAGE]" to don't lose any page breaks.

- text = self._substitute_page_breaks(text)

- elements = self._split_sentences(text)

- elif split_by == "word":

- elements = text.split(" ")

- else:

- raise NotImplementedError(

- "PreProcessor only supports 'passage', 'sentence' or 'word' split_by options."

- )

+ elements, split_at = self._split_into_units(text=text, split_by=split_by)

# concatenate individual elements based on split_length & split_stride

- if split_overlap:

- segments = windowed(elements, n=split_length, step=split_length - split_overlap)

- else:

- segments = windowed(elements, n=split_length, step=split_length)

- text_splits = []

- splits_pages = []

- cur_page = 1

- for seg in segments:

- current_units = [unit for unit in seg if unit is not None]

- txt = " ".join(current_units)

- if len(txt) > 0:

- text_splits.append(txt)

- splits_pages.append(cur_page)

- if self.add_page_number:

- processed_units = current_units[: split_length - split_overlap]

- num_page_breaks = sum(processed_unit.count("\f") for processed_unit in processed_units)

- cur_page += num_page_breaks

+ text_splits, splits_pages, splits_start_idxs = self._concatenate_units(

+ elements=elements, split_length=split_length, split_overlap=split_overlap, split_at=split_at

+ )

# create new document dicts for each text split

+ documents = self._create_docs_from_splits(

+ text_splits=text_splits,

+ splits_pages=splits_pages,

+ splits_start_idxs=splits_start_idxs,

+ headlines=headlines,

+ meta=document.meta or {},

+ id_hash_keys=id_hash_keys,

+ )

+

+ return documents

+

+ @staticmethod

+ def _clean_whitespace(text: str, headlines: List[Dict]) -> Tuple[str, List[Dict]]:

+ """

+ Strips leading and trailing whitespace from each line in the text.

+ """

+ pages = text.split("\f")

+ cleaned_pages = []

+ cur_headline_idx = 0

+ num_headlines = len(headlines)

+ cur_char_idx = 0

+ num_removed_chars_total = 0

+ for page in pages:

+ lines = page.splitlines()

+ cleaned_lines = []

+ for idx, line in enumerate(lines):

+ old_line_len = len(line)

+ cleaned_line = line.strip()

+ cleaned_line_len = len(cleaned_line)

+ cur_char_idx += old_line_len + 1 # add 1 for newline char

+ if old_line_len != cleaned_line_len:

+ num_removed_chars_current = old_line_len - cleaned_line_len

+ num_removed_chars_total += num_removed_chars_current

+ for headline_idx in range(cur_headline_idx, num_headlines):

+ if cur_char_idx - num_removed_chars_total <= headlines[headline_idx]["start_idx"]:

+ headlines[headline_idx]["start_idx"] -= num_removed_chars_current

+ else:

+ cur_headline_idx += 1

+

+ cleaned_lines.append(cleaned_line)

+ cleaned_page = "\n".join(cleaned_lines)

+ cleaned_pages.append(cleaned_page)

+

+ cleaned_text = "\f".join(cleaned_pages)

+ return cleaned_text, headlines

+

+ @staticmethod

+ def _clean_empty_lines(text: str, headlines: List[Dict]) -> Tuple[str, List[Dict]]:

+ if headlines:

+ num_headlines = len(headlines)

+ multiple_new_line_matches = re.finditer(r"\n\n\n+", text)

+ cur_headline_idx = 0

+ num_removed_chars_accumulated = 0

+ for match in multiple_new_line_matches:

+ num_removed_chars_current = match.end() - match.start() - 2

+ for headline_idx in range(cur_headline_idx, num_headlines):

+ if match.end() - num_removed_chars_accumulated <= headlines[headline_idx]["start_idx"]:

+ headlines[headline_idx]["start_idx"] -= num_removed_chars_current

+ else:

+ cur_headline_idx += 1

+ num_removed_chars_accumulated += num_removed_chars_current

+

+ cleaned_text = re.sub(r"\n\n\n+", "\n\n", text)

+ return cleaned_text, headlines

+

+ @staticmethod

+ def _remove_substring(text: str, substring: str, headlines: List[Dict]) -> Tuple[str, List[Dict]]:

+ if headlines:

+ num_headlines = len(headlines)

+ multiple_substring_matches = re.finditer(re.escape(substring), text)

+ cur_headline_idx = 0

+ num_removed_chars_accumulated = 0

+ for match in multiple_substring_matches:

+ for headline_idx in range(cur_headline_idx, num_headlines):

+ if match.end() - num_removed_chars_accumulated <= headlines[headline_idx]["start_idx"]:

+ headlines[headline_idx]["start_idx"] -= len(substring)

+ else:

+ cur_headline_idx += 1

+ num_removed_chars_accumulated += len(substring)

+

+ cleaned_text = text.replace(substring, "")

+ return cleaned_text, headlines

+

+ def _split_by_word_respecting_sent_boundary(

+ self, text: str, split_length: int, split_overlap: int

+ ) -> Tuple[List[str], List[int], List[int]]:

+ """

+ Splits the text into parts of split_length words while respecting sentence boundaries.

+ """

+ sentences = self._split_sentences(text)

+

+ word_count_slice = 0

+ cur_page = 1

+ cur_start_idx = 0

+ splits_pages = []

+ list_splits = []

+ splits_start_idxs = []

+ current_slice: List[str] = []

+ for sen in sentences:

+ word_count_sen = len(sen.split())

+

+ if word_count_sen > split_length:

+ long_sentence_message = (

+ "We found one or more sentences whose word count is higher than the split length."

+ )

+ if long_sentence_message not in self.print_log:

+ self.print_log.add(long_sentence_message)

+ logger.warning(long_sentence_message)

+

+ if word_count_slice + word_count_sen > split_length:

+ # Number of words exceeds split_length -> save current slice and start a new one

+ if current_slice:

+ list_splits.append(current_slice)

+ splits_pages.append(cur_page)

+ splits_start_idxs.append(cur_start_idx)

+

+ if split_overlap:

+ overlap = []

+ processed_sents = []

+ word_count_overlap = 0

+ current_slice_copy = deepcopy(current_slice)

+ for idx, s in reversed(list(enumerate(current_slice))):

+ sen_len = len(s.split())

+ if word_count_overlap < split_overlap:

+ overlap.append(s)

+ word_count_overlap += sen_len

+ current_slice_copy.pop(idx)

+ else:

+ processed_sents = current_slice_copy

+ break

+ current_slice = list(reversed(overlap))

+ word_count_slice = word_count_overlap

+ else:

+ processed_sents = current_slice

+ current_slice = []

+ word_count_slice = 0

+

+ cur_start_idx += len("".join(processed_sents))

+

+ # Count number of page breaks in processed sentences

+ if self.add_page_number:

+ num_page_breaks = self._count_processed_page_breaks(

+ sentences=processed_sents,

+ split_overlap=split_overlap,

+ overlapping_sents=current_slice,

+ current_sent=sen,

+ )

+ cur_page += num_page_breaks

+

+ current_slice.append(sen)

+ word_count_slice += word_count_sen

+

+ if current_slice:

+ list_splits.append(current_slice)

+ splits_pages.append(cur_page)

+ splits_start_idxs.append(cur_start_idx)

+

+ text_splits = []

+ for sl in list_splits:

+ txt = "".join(sl)

+ if len(txt) > 0:

+ text_splits.append(txt)

+

+ return text_splits, splits_pages, splits_start_idxs

+

+ def _split_into_units(self, text: str, split_by: str) -> Tuple[List[str], str]:

+ if split_by == "passage":

+ elements = text.split("\n\n")

+ split_at = "\n\n"

+ elif split_by == "sentence":

+ elements = self._split_sentences(text)

+ split_at = "" # whitespace will be preserved while splitting text into sentences

+ elif split_by == "word":

+ elements = text.split(" ")

+ split_at = " "

+ else:

+ raise NotImplementedError("PreProcessor only supports 'passage', 'sentence' or 'word' split_by options.")

+

+ return elements, split_at

+

+ def _concatenate_units(

+ self, elements: List[str], split_length: int, split_overlap: int, split_at: str

+ ) -> Tuple[List[str], List[int], List[int]]:

+ """

+ Concatenates the elements into parts of split_length units.

+ """

+ segments = windowed(elements, n=split_length, step=split_length - split_overlap)

+ split_at_len = len(split_at)

+ text_splits = []

+ splits_pages = []

+ splits_start_idxs = []

+ cur_page = 1

+ cur_start_idx = 0

+ for seg in segments:

+ current_units = [unit for unit in seg if unit is not None]

+ txt = split_at.join(current_units)

+ if len(txt) > 0:

+ text_splits.append(txt)

+ splits_pages.append(cur_page)

+ splits_start_idxs.append(cur_start_idx)

+ processed_units = current_units[: split_length - split_overlap]

+ cur_start_idx += len((split_at_len * " ").join(processed_units)) + split_at_len

+ if self.add_page_number:

+ num_page_breaks = sum(processed_unit.count("\f") for processed_unit in processed_units)

+ cur_page += num_page_breaks

+

+ return text_splits, splits_pages, splits_start_idxs

+

+ def _create_docs_from_splits(

+ self,

+ text_splits: List[str],

+ splits_pages: List[int],

+ splits_start_idxs: List[int],

+ headlines: List[Dict],

+ meta: Dict,

+ id_hash_keys: Optional[List[str]] = None,

+ ) -> List[Document]:

+ """

+ Creates Document objects from text splits enriching them with page number and headline information if given.

+ """

documents = []

- for i, txt in enumerate(text_splits):

- # now we want to get rid of the empty page placeholder and skip the split if there's nothing left

- txt_clean = txt.replace(EMPTY_PAGE_PLACEHOLDER, "")

- if not txt_clean.strip():

- continue

- doc = Document(content=txt_clean, meta=deepcopy(document.meta) or {}, id_hash_keys=id_hash_keys)

+ earliest_rel_hl = 0

+ for i, txt in enumerate(text_splits):

+ meta = deepcopy(meta)

+ doc = Document(content=txt, meta=meta, id_hash_keys=id_hash_keys)

doc.meta["_split_id"] = i

if self.add_page_number:

doc.meta["page"] = splits_pages[i]

+ if headlines:

+ split_start_idx = splits_start_idxs[i]

+ relevant_headlines, earliest_rel_hl = self._extract_relevant_headlines_for_split(

+ headlines=headlines, split_txt=txt, split_start_idx=split_start_idx, earliest_rel_hl=earliest_rel_hl

+ )

+ doc.meta["headlines"] = relevant_headlines

+

documents.append(doc)

return documents

+ @staticmethod

+ def _extract_relevant_headlines_for_split(

+ headlines: List[Dict], split_txt: str, split_start_idx: int, earliest_rel_hl: int

+ ) -> Tuple[List[Dict], int]:

+ """

+ Given a list of headlines, a text split, and the start index of the split in the original text,

+ extracts the headlines that are relevant for the split.

+ """

+ relevant_headlines = []

+

+ for headline_idx in range(earliest_rel_hl, len(headlines)):

+ # Headline is part of current split

+ if split_start_idx <= headlines[headline_idx]["start_idx"] < split_start_idx + len(split_txt):

+ headline_copy = deepcopy(headlines[headline_idx])

+ headline_copy["start_idx"] = headlines[headline_idx]["start_idx"] - split_start_idx

+ relevant_headlines.append(headline_copy)

+ # Headline appears before current split, but might be relevant for current split

+ elif headlines[headline_idx]["start_idx"] < split_start_idx:

+ # Check if following headlines are on a higher level

+ headline_to_check = headline_idx + 1

+ headline_is_relevant = True

+ while (

+ headline_to_check < len(headlines) and headlines[headline_to_check]["start_idx"] <= split_start_idx

+ ):

+ if headlines[headline_to_check]["level"] <= headlines[headline_idx]["level"]:

+ headline_is_relevant = False

+ break

+ headline_to_check += 1

+ if headline_is_relevant:

+ headline_copy = deepcopy(headlines[headline_idx])

+ headline_copy["start_idx"] = None

+ relevant_headlines.append(headline_copy)

+ else:

+ earliest_rel_hl += 1

+ # Headline (and all subsequent ones) only relevant for later splits

+ elif headlines[headline_idx]["start_idx"] > split_start_idx + len(split_txt):

+ break

+

+ return relevant_headlines, earliest_rel_hl

+

def _find_and_remove_header_footer(

self, text: str, n_chars: int, n_first_pages_to_ignore: int, n_last_pages_to_ignore: int

) -> str:

@@ -542,46 +698,74 @@ def _split_sentences(self, text: str) -> List[str]:

:param text: str, text to tokenize

:return: list[str], list of sentences

"""

- sentences = []

-

language_name = iso639_to_nltk.get(self.language)

+ sentence_tokenizer = self._load_sentence_tokenizer(language_name)

+ # The following adjustment of PunktSentenceTokenizer is inspired by:

+ # https://stackoverflow.com/questions/33139531/preserve-empty-lines-with-nltks-punkt-tokenizer

+ # It is needed for preserving whitespace while splitting text into sentences.

+ period_context_fmt = r"""

+ %(SentEndChars)s # a potential sentence ending

+ \s* # match potential whitespace (is originally in lookahead assertion)

+ (?=(?P<after_tok>

+ %(NonWord)s # either other punctuation

+ |

+ (?P<next_tok>\S+) # or some other token - original version: \s+(?P<next_tok>\S+)

+ ))"""

+ re_period_context = re.compile(

+ period_context_fmt

+ % {

+ "NonWord": sentence_tokenizer._lang_vars._re_non_word_chars,

+ "SentEndChars": sentence_tokenizer._lang_vars._re_sent_end_chars,

+ },

+ re.UNICODE | re.VERBOSE,

+ )

+ sentence_tokenizer._lang_vars._re_period_context = re_period_context

+

+ sentences = sentence_tokenizer.tokenize(text)

+ return sentences

+

+ def _load_sentence_tokenizer(self, language_name: Optional[str]) -> nltk.tokenize.punkt.PunktSentenceTokenizer:

+

# Try to load a custom model from 'tokenizer_model_path'

if self.tokenizer_model_folder is not None:

tokenizer_model_path = Path(self.tokenizer_model_folder).absolute() / f"{self.language}.pickle"

try:

sentence_tokenizer = nltk.data.load(f"file:{str(tokenizer_model_path)}", format="pickle")

- sentences = sentence_tokenizer.tokenize(text)

- except LookupError:

- logger.exception("PreProcessor couldn't load sentence tokenizer from %s", tokenizer_model_path)

- except (UnpicklingError, ValueError) as e:

- logger.exception(

- "PreProcessor couldn't determine model format of sentence tokenizer at %s", tokenizer_model_path

- )

- if sentences:

- return sentences

-

- # NLTK failed to split, fallback to the default model or to English

- if language_name is not None:

- logger.error(

- f"PreProcessor couldn't find custom sentence tokenizer model for {self.language}. Using default {self.language} model."

- )

- return nltk.tokenize.sent_tokenize(text, language=language_name)

+ except (LookupError, UnpicklingError, ValueError) as e:

+ if isinstance(e, LookupError):

+ logger.exception("PreProcessor couldn't load sentence tokenizer from %s", tokenizer_model_path)

+ else:

+ logger.exception(

+ "PreProcessor couldn't determine model format of sentence tokenizer at %s",

+ tokenizer_model_path,

+ )

+

+ # NLTK failed to load custom SentenceTokenizer, fallback to the default model or to English

+ if language_name is not None:

+ logger.error(

+ f"PreProcessor couldn't find custom sentence tokenizer model for {self.language}. "

+ f"Using default {self.language} model."

+ )

+ sentence_tokenizer = nltk.data.load(f"tokenizers/punkt/{language_name}.pickle")

+ else:

+ logger.error(

+ f"PreProcessor couldn't find default or custom sentence tokenizer model for {self.language}. "

+ f"Using English instead."

+ )

+ sentence_tokenizer = nltk.data.load(f"tokenizers/punkt/english.pickle")

+ # Use a default NLTK model

+ elif language_name is not None:

+ sentence_tokenizer = nltk.data.load(f"tokenizers/punkt/{language_name}.pickle")

+ else:

logger.error(

- f"PreProcessor couldn't find default or custom sentence tokenizer model for {self.language}. Using English instead."

+ f"PreProcessor couldn't find the default sentence tokenizer model for {self.language}. "

+ "Using English instead. You may train your own model and use the 'tokenizer_model_folder' parameter."

)

- return nltk.tokenize.sent_tokenize(text, language="english")

-

- # Use a default NLTK model

- if language_name is not None:

- return nltk.tokenize.sent_tokenize(text, language=language_name)

+ sentence_tokenizer = nltk.data.load(f"tokenizers/punkt/english.pickle")

- logger.error(

- f"PreProcessor couldn't find default sentence tokenizer model for {self.language}. Using English instead. "

- "You may train your own model and use the 'tokenizer_model_folder' parameter."

- )

- return nltk.tokenize.sent_tokenize(text, language="english")

+ return sentence_tokenizer

@staticmethod

def _count_processed_page_breaks(

@@ -603,13 +787,3 @@ def _count_processed_page_breaks(

num_page_breaks += 1

return num_page_breaks

-

- @staticmethod

- def _substitute_page_breaks(text: str) -> str:

- """

- This method substitutes the page break character "\f" for "[NEW_PAGE]" if it is at the end of a sentence.

- """

- # This regex matches any of sentence-ending punctuation (one of ".", ":", "?", "!") followed by a page break

- # character ("\f") and replaces the page break character with "[NEW_PAGE]" keeping the original sentence-ending

- # punctuation.

- return re.sub(r"([\.:?!])\f", r"\1 [NEW_PAGE]", text)

|

diff --git a/test/nodes/test_file_converter.py b/test/nodes/test_file_converter.py

index 0f6d095c3f..1cffe6b552 100644

--- a/test/nodes/test_file_converter.py

+++ b/test/nodes/test_file_converter.py

@@ -141,6 +141,31 @@ def test_markdown_converter():

assert document.content.startswith("What to build with Haystack")

+def test_markdown_converter_headline_extraction():

+ expected_headlines = [

+ ("What to build with Haystack", 1),

+ ("Core Features", 1),

+ ("Quick Demo", 1),

+ ("2nd level headline for testing purposes", 2),

+ ("3rd level headline for testing purposes", 3),

+ ]

+

+ converter = MarkdownConverter(extract_headlines=True, remove_code_snippets=False)

+ document = converter.convert(file_path=SAMPLES_PATH / "markdown" / "sample.md")[0]

+

+ # Check that the correct number of headlines is extracted

+ assert len(document.meta["headlines"]) == 5

+ for extracted_headline, (expected_headline, expected_level) in zip(document.meta["headlines"], expected_headlines):

+ # Check that the correct headline and level are extracted

+ assert extracted_headline["headline"] == expected_headline

+ assert extracted_headline["level"] == expected_level

+

+ # Check that the correct start_idx is extracted

+ start_idx = extracted_headline["start_idx"]

+ hl_len = len(extracted_headline["headline"])

+ assert extracted_headline["headline"] == document.content[start_idx : start_idx + hl_len]

+

+

def test_azure_converter():

# Check if Form Recognizer endpoint and credential key in environment variables

if "AZURE_FORMRECOGNIZER_ENDPOINT" in os.environ and "AZURE_FORMRECOGNIZER_KEY" in os.environ:

diff --git a/test/nodes/test_preprocessor.py b/test/nodes/test_preprocessor.py

index 91f2e9ad6d..7b933bc576 100644

--- a/test/nodes/test_preprocessor.py

+++ b/test/nodes/test_preprocessor.py

@@ -26,6 +26,15 @@

in the sentence.

"""

+HEADLINES = [

+ {"headline": "sample sentence in paragraph_1", "start_idx": 11, "level": 0},

+ {"headline": "paragraph_1", "start_idx": 198, "level": 1},

+ {"headline": "sample sentence in paragraph_2", "start_idx": 223, "level": 0},

+ {"headline": "in paragraph_2", "start_idx": 365, "level": 1},

+ {"headline": "sample sentence in paragraph_3", "start_idx": 434, "level": 0},

+ {"headline": "trick the test", "start_idx": 603, "level": 1},

+]

+

LEGAL_TEXT_PT = """

A Lei nº 9.514/1997, que instituiu a alienação fiduciária de

bens imóveis, é norma especial e posterior ao Código de Defesa do

@@ -124,8 +133,8 @@ def test_preprocess_word_split():

documents = preprocessor.process(document)

for i, doc in enumerate(documents):

if i == 0:

- assert len(doc.content.split(" ")) == 14

- assert len(doc.content.split(" ")) <= 15 or doc.content.startswith("This is to trick")

+ assert len(doc.content.split()) == 14

+ assert len(doc.content.split()) <= 15 or doc.content.startswith("This is to trick")

assert len(documents) == 8

preprocessor = PreProcessor(

@@ -244,9 +253,217 @@ def test_page_number_extraction_on_empty_pages():

assert documents[1].content.strip() == text_page_three

-def test_substitute_page_break():

- # Page breaks at the end of sentences should be replaced by "[NEW_PAGE]", while page breaks in between of

- # sentences should not be replaced.

- result = PreProcessor._substitute_page_breaks(TEXT)

- assert result[211:221] == "[NEW_PAGE]"

- assert result[654] == "\f"

+def test_headline_processing_split_by_word():

+ expected_headlines = [

+ [{"headline": "sample sentence in paragraph_1", "start_idx": 11, "level": 0}],

+ [

+ {"headline": "sample sentence in paragraph_1", "start_idx": None, "level": 0},

+ {"headline": "paragraph_1", "start_idx": 19, "level": 1},

+ {"headline": "sample sentence in paragraph_2", "start_idx": 44, "level": 0},

+ {"headline": "in paragraph_2", "start_idx": 186, "level": 1},

+ ],

+ [

+ {"headline": "sample sentence in paragraph_2", "start_idx": None, "level": 0},

+ {"headline": "in paragraph_2", "start_idx": None, "level": 1},

+ {"headline": "sample sentence in paragraph_3", "start_idx": 53, "level": 0},

+ ],

+ [

+ {"headline": "sample sentence in paragraph_3", "start_idx": None, "level": 0},

+ {"headline": "trick the test", "start_idx": 36, "level": 1},

+ ],

+ ]

+

+ document = Document(content=TEXT, meta={"headlines": HEADLINES})

+ preprocessor = PreProcessor(

+ split_length=30, split_overlap=0, split_by="word", split_respect_sentence_boundary=False

+ )

+ documents = preprocessor.process(document)

+

+ for doc, expected in zip(documents, expected_headlines):

+ assert doc.meta["headlines"] == expected

+

+

+def test_headline_processing_split_by_word_overlap():

+ expected_headlines = [

+ [{"headline": "sample sentence in paragraph_1", "start_idx": 11, "level": 0}],

+ [

+ {"headline": "sample sentence in paragraph_1", "start_idx": None, "level": 0},

+ {"headline": "paragraph_1", "start_idx": 71, "level": 1},

+ {"headline": "sample sentence in paragraph_2", "start_idx": 96, "level": 0},

+ ],

+ [

+ {"headline": "sample sentence in paragraph_2", "start_idx": None, "level": 0},