T Le committed

Commit 84ed651 · 1 parent: f8d9363

Update merge with main branch

- pages/1 Scattertext.py +40 -1
- pages/10 WordCloud.py +45 -4
- pages/2 Topic Modeling.py +55 -0
- pages/3 Bidirected Network.py +49 -0
- pages/4 Sunburst.py +42 -6
- pages/5 Burst Detection.py +49 -0
- pages/6 Keywords Stem.py +49 -0
- pages/7 Sentiment Analysis.py +43 -0
- pages/8 Shifterator.py +36 -1
- pages/9 Summarization.py +33 -1

pages/1 Scattertext.py (changed)

```diff
@@ -43,7 +43,46 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/8 Shifterator.py", label="Shifterator", icon="8️⃣")
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
-
+
+with st.expander("Before you start", expanded = True):
+
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])
+    with tab1:
+        st.write("Scattertext is an open-source tool designed to visualize linguistic variations between document categories in a language-independent way. It presents a scatterplot, with each axis representing the rank-frequency of a term's occurrence within a category of documents.")
+        st.divider()
+        st.write('💡 The idea came from this:')
+        st.write('Kessler, J. S. (2017). Scattertext: a Browser-Based Tool for Visualizing how Corpora Differ. https://doi.org/10.48550/arXiv.1703.00565')
+
+    with tab2:
+        st.text("1. Put your file. Choose your preferred column to analyze.")
+        st.text("2. Choose your preferred method to compare and decide words you want to remove.")
+        st.text("3. Finally, you can visualize your data.")
+        st.error("This app includes lemmatization and stopwords. Currently, we only offer English words.", icon="💬")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+----------------------------------+
+        | Source         | File Type              | Column                           |
+        +----------------+------------------------+----------------------------------+
+        | Scopus         | Comma-separated values | Choose your preferred column     |
+        |                | (.csv)                 | that you have                    |
+        +----------------+------------------------|                                  |
+        | Web of Science | Tab delimited file     |                                  |
+        |                | (.txt)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Lens.org       | Comma-separated values |                                  |
+        |                | (.csv)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Other          | .csv                   |                                  |
+        +----------------+------------------------|                                  |
+        | Hathitrust     | .json                  |                                  |
+        +----------------+------------------------+----------------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Scattertext]', anchor=False)
+        st.write("Click the :blue[Download SVG] on the right side.")
+
 st.header("Scattertext", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 
```
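The Scattertext Prologue describes each axis as the rank-frequency of a term within one category of documents. The sketch below illustrates that idea with the standard library only. It assumes whitespace tokenization and dense ranks scaled to [0, 1]; the helper names `dense_rank_scaled` and `rank_frequency_axes` are hypothetical and are not part of the scattertext package or of this app.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def dense_rank_scaled(counts: Dict[str, int], vocab: List[str]) -> Dict[str, float]:
    """Hypothetical helper: dense-rank each term's frequency, scaled to [0, 1].

    Equal frequencies share a rank; terms absent from `counts` have frequency 0.
    """
    freqs = [counts.get(term, 0) for term in vocab]
    distinct = sorted(set(freqs))
    rank_of = {f: i for i, f in enumerate(distinct)}
    top = max(len(distinct) - 1, 1)  # avoids division by zero when every rank ties
    return {term: rank_of[f] / top for term, f in zip(vocab, freqs)}


def rank_frequency_axes(cat_a: Iterable[str], cat_b: Iterable[str]) -> Dict[str, Tuple[float, float]]:
    """Hypothetical helper: {term: (x, y)} with x/y the scaled rank in category A/B."""
    counts_a = Counter(w for doc in cat_a for w in doc.lower().split())
    counts_b = Counter(w for doc in cat_b for w in doc.lower().split())
    vocab = sorted(set(counts_a) | set(counts_b))
    xs = dense_rank_scaled(counts_a, vocab)
    ys = dense_rank_scaled(counts_b, vocab)
    return {term: (xs[term], ys[term]) for term in vocab}


if __name__ == "__main__":
    library_docs = ["library data data", "data catalog"]
    museum_docs = ["museum data", "museum archive"]
    for term, (x, y) in rank_frequency_axes(library_docs, museum_docs).items():
        print(f"{term:<8} x={x:.2f} y={y:.2f}")
```

Terms near the diagonal rank similarly in both categories, while terms pushed toward one axis are characteristic of that category. The scattertext library may tokenize and scale ranks differently, so treat this only as an illustration of what the axes mean.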

pages/10 WordCloud.py (changed)

```diff
@@ -3,6 +3,11 @@ import pandas as pd
 import matplotlib.pyplot as plt
 from wordcloud import WordCloud
 from tools import sourceformat as sf
+import nltk
+from nltk.corpus import stopwords
+from gensim.parsing.preprocessing import remove_stopwords
+nltk.download('stopwords')
+
 
 # ===config===
 st.set_page_config(
@@ -34,7 +39,39 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/8 Shifterator.py", label="Shifterator", icon="8️⃣")
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
-
+
+with st.expander("Before you start", expanded = True):
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])
+    with tab1:
+        st.write("")
+
+    with tab2:
+        st.text("1. Put your file. Choose your preferred column to analyze (if CSV).")
+        st.text("2. Choose your preferred method to count the words and decide how many top words you want to include or remove.")
+        st.text("3. Finally, you can visualize your data.")
+        st.error("This app includes lemmatization and stopwords. Currently, we only offer English words.", icon="💬")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+
+        | Source         | File Type              |
+        +----------------+------------------------+
+        | Scopus         | Comma-separated values |
+        |                | (.csv)                 |
+        +----------------+------------------------|
+        | Lens.org       | Comma-separated values |
+        |                | (.csv)                 |
+        +----------------+------------------------|
+        | Other          | .csv/ .txt(full text)  |
+        +----------------+------------------------|
+        | Hathitrust     | .json                  |
+        +----------------+------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[WordCloud Download]', anchor=False)
+        st.write("Right-click image and click \"Save-as\"")
+
 st.header("Wordcloud", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 
@@ -126,7 +163,7 @@ if uploaded_file is not None:
 
     with c2:
         words_to_remove = st.text_input("Remove specific words. Separate words by semicolons (;)")
-
+        filterwords = words_to_remove.split(';')
         image_width = st.number_input("Image width", value = 400)
         image_height = st.number_input("Image height", value = 200)
         scale = st.number_input("Scale", value = 1)
@@ -140,6 +177,8 @@ if uploaded_file is not None:
         texts = conv_txt(uploaded_file)
         colcho = c1.selectbox("Choose Column", list(texts))
         fulltext = " ".join(list(texts[colcho]))
+        fulltext = remove_stopwords(fulltext)
+
 
     except:
         fulltext = read_txt(uploaded_file)
@@ -150,7 +189,7 @@ if uploaded_file is not None:
         wordcloud = WordCloud(max_font_size = max_font,
                               max_words = max_words,
                               background_color=background,
-                              stopwords =
+                              stopwords = filterwords,
                               height = image_height,
                               width = image_width,
                               scale = scale).generate(fulltext)
@@ -165,13 +204,15 @@ if uploaded_file is not None:
         colcho = c1.selectbox("Choose Column", list(texts))
 
         fullcolumn = " ".join(list(texts[colcho]))
+
+        fullcolumn = remove_stopwords(fullcolumn)
 
         if st.button("Submit"):
 
             wordcloud = WordCloud(max_font_size = max_font,
                                   max_words = max_words,
                                   background_color=background,
-                                  stopwords =
+                                  stopwords = filterwords,
                                   height = image_height,
                                   width = image_width,
                                   scale = scale).generate(fullcolumn)
```
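This commit turns the semicolon-separated text box into `filterwords = words_to_remove.split(';')` and passes it to `WordCloud(stopwords=...)`. A plain `split(';')` has two quirks that may be worth handling: an empty box yields `['']`, and entries keep their surrounding spaces, so `"data; science"` produces `' science'`, which never matches a word. In addition, as far as we know from the wordcloud package, supplying any `stopwords` value replaces its built-in `STOPWORDS` list rather than extending it. The sketch below is a hedged alternative parser; `parse_stopword_input` is a hypothetical helper, not code from the app.

```python
from typing import Iterable, Optional, Set


def parse_stopword_input(raw: str, base: Optional[Iterable[str]] = None) -> Set[str]:
    """Hypothetical helper: turn "data; Science;;" into {"data", "science"}.

    Entries are stripped and lowercased, and empty entries are dropped, so an
    empty text box gives an empty set instead of {''}. Words from `base`
    (for example a default stopword list) are merged in.
    """
    words = {w.strip().lower() for w in raw.split(";") if w.strip()}
    if base is not None:
        words |= {w.lower() for w in base}
    return words


if __name__ == "__main__":
    print(sorted(parse_stopword_input("data; Science;;  library ")))
    print(parse_stopword_input(""))
```

One way to wire it in, assuming the page keeps its current widgets, is `filterwords = parse_stopword_input(words_to_remove, base=STOPWORDS)` with `from wordcloud import STOPWORDS`, so the user's words are added to the defaults instead of replacing them.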

pages/2 Topic Modeling.py

CHANGED

|

@@ -78,6 +78,61 @@ with st.popover("🔗 Menu"):

|

|

| 78 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 79 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 80 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 81 |

st.header("Topic Modeling", anchor=False)

|

| 82 |

st.subheader('Put your file here...', anchor=False)

|

| 83 |

|

|

|

|

| 78 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 79 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 80 |

|

| 81 |

+

with st.expander("Before you start", expanded = True):

|

| 82 |

+

|

| 83 |

+

tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])

|

| 84 |

+

with tab1:

|

| 85 |

+

st.write("Topic modeling has numerous advantages for librarians in different aspects of their work. A crucial benefit is an ability to quickly organize and categorize a huge volume of textual content found in websites, institutional archives, databases, emails, and reference desk questions. Librarians can use topic modeling approaches to automatically identify the primary themes or topics within these documents, making navigating and retrieving relevant information easier. Librarians can identify and understand the prevailing topics of discussion by analyzing text data with topic modeling tools, allowing them to assess user feedback, tailor their services to meet specific needs and make informed decisions about collection development and resource allocation. Making ontologies, automatic subject classification, recommendation services, bibliometrics, altmetrics, and better resource searching and retrieval are a few examples of topic modeling. To do topic modeling on other text like chats and surveys, change the column name to 'Abstract' in your file.")

|

| 86 |

+

st.divider()

|

| 87 |

+

st.write('💡 The idea came from this:')

|

| 88 |

+

st.write('Lamba, M., & Madhusudhan, M. (2021, July 31). Topic Modeling. Text Mining for Information Professionals, 105–137. https://doi.org/10.1007/978-3-030-85085-2_4')

|

| 89 |

+

|

| 90 |

+

with tab2:

|

| 91 |

+

st.text("1. Put your file. Choose your preferred column.")

|

| 92 |

+

st.text("2. Choose your preferred method. LDA is the most widely used, whereas Biterm is appropriate for short text, and BERTopic works well for large text data as well as supports more than 50+ languages.")

|

| 93 |

+

st.text("3. Finally, you can visualize your data.")

|

| 94 |

+

st.error("This app includes lemmatization and stopwords for the abstract text. Currently, we only offer English words.", icon="💬")

|

| 95 |

+

|

| 96 |

+

with tab3:

|

| 97 |

+

st.code("""

|

| 98 |

+

+----------------+------------------------+----------------------------------+

|

| 99 |

+

| Source | File Type | Column |

|

| 100 |

+

+----------------+------------------------+----------------------------------+

|

| 101 |

+

| Scopus | Comma-separated values | Choose your preferred column |

|

| 102 |

+

| | (.csv) | that you have |

|

| 103 |

+

+----------------+------------------------| |

|

| 104 |

+

| Web of Science | Tab delimited file | |

|

| 105 |

+

| | (.txt) | |

|

| 106 |

+

+----------------+------------------------| |

|

| 107 |

+

| Lens.org | Comma-separated values | |

|

| 108 |

+

| | (.csv) | |

|

| 109 |

+

+----------------+------------------------| |

|

| 110 |

+

| Other | .csv | |

|

| 111 |

+

+----------------+------------------------| |

|

| 112 |

+

| Hathitrust | .json | |

|

| 113 |

+

+----------------+------------------------+----------------------------------+

|

| 114 |

+

""", language=None)

|

| 115 |

+

|

| 116 |

+

with tab4:

|

| 117 |

+

st.subheader(':blue[pyLDA]', anchor=False)

|

| 118 |

+

st.button('Download image')

|

| 119 |

+

st.text("Click Download Image button.")

|

| 120 |

+

|

| 121 |

+

st.divider()

|

| 122 |

+

st.subheader(':blue[Biterm]', anchor=False)

|

| 123 |

+

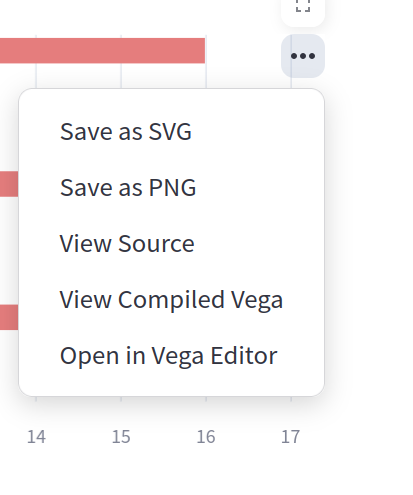

st.text("Click the three dots at the top right then select the desired format.")

|

| 124 |

+

st.markdown("")

|

| 125 |

+

|

| 126 |

+

st.divider()

|

| 127 |

+

st.subheader(':blue[BERTopic]', anchor=False)

|

| 128 |

+

st.text("Click the camera icon on the top right menu")

|

| 129 |

+

st.markdown("")

|

| 130 |

+

|

| 131 |

+

st.divider()

|

| 132 |

+

st.subheader(':blue[CSV Result]', anchor=False)

|

| 133 |

+

st.text("Click Download button")

|

| 134 |

+

st.button('Download Results')

|

| 135 |

+

|

| 136 |

st.header("Topic Modeling", anchor=False)

|

| 137 |

st.subheader('Put your file here...', anchor=False)

|

| 138 |

|

pages/3 Bidirected Network.py (changed)

```diff
@@ -59,6 +59,55 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
 
+with st.expander("Before you start", expanded = True):
+
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Graph"])
+    with tab1:
+        st.write("The use of network text analysis by librarians can be quite beneficial. Finding hidden correlations and connections in textual material is a significant advantage. Using network text analysis tools, librarians can improve knowledge discovery, obtain deeper insights, and support scholars meaningfully, ultimately enhancing the library's services and resources. This menu provides a two-way relationship instead of the general network of relationships to enhance the co-word analysis. Since it is based on ARM, you may obtain transactional data information using this menu. Please name the column in your file 'Keyword' instead.")
+        st.divider()
+        st.write('💡 The idea came from this:')
+        st.write('Santosa, F. A. (2023). Adding Perspective to the Bibliometric Mapping Using Bidirected Graph. Open Information Science, 7(1), 20220152. https://doi.org/10.1515/opis-2022-0152')
+
+    with tab2:
+        st.text("1. Put your file.")
+        st.text("2. Choose your preferable method. Picture below may help you to choose wisely.")
+        st.markdown("")
+        st.text('Source: https://studymachinelearning.com/stemming-and-lemmatization/')
+        st.text("3. Choose the value of Support and Confidence. If you're not sure how to use it please read the article above or just try it!")
+        st.text("4. You can see the table and a simple visualization before making a network visualization.")
+        st.text('5. Click "Generate network visualization" to see the network')
+        st.error("The more data on your table, the more you'll see on network.", icon="🚨")
+        st.error("If the table contains many rows, the network will take more time to process. Please use it efficiently.", icon="⌛")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+---------------------------------+
+        | Source         | File Type              | Column                          |
+        +----------------+------------------------+---------------------------------+
+        | Scopus         | Comma-separated values | Author Keywords                 |
+        |                | (.csv)                 | Index Keywords                  |
+        +----------------+------------------------+---------------------------------+
+        | Web of Science | Tab delimited file     | Author Keywords                 |
+        |                | (.txt)                 | Keywords Plus                   |
+        +----------------+------------------------+---------------------------------+
+        | Lens.org       | Comma-separated values | Keywords (Scholarly Works)      |
+        |                | (.csv)                 |                                 |
+        +----------------+------------------------+---------------------------------+
+        | Dimensions     | Comma-separated values | MeSH terms                      |
+        |                | (.csv)                 |                                 |
+        +----------------+------------------------+---------------------------------+
+        | Other          | .csv                   | Change your column to 'Keyword' |
+        |                |                        | and separate the words with ';' |
+        +----------------+------------------------+---------------------------------+
+        | Hathitrust     | .json                  | htid (Hathitrust ID)            |
+        +----------------+------------------------+---------------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Bidirected Network]', anchor=False)
+        st.text("Zoom in, zoom out, or shift the nodes as desired, then right-click and select Save image as ...")
+        st.markdown("")
+
 st.header("Bidirected Network", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 
```
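The Bidirected Network Prologue says the menu is based on association rule mining (ARM), and step 3 asks for Support and Confidence values. The sketch below shows why those two measures can give one keyword pair two different directed edges: support is symmetric, but confidence divides by the antecedent's share of records. It assumes each record's 'Keyword' cell is a ';'-separated list (as the Requirements table asks for "Other" files) and builds only one-to-one rules; `to_transactions` and `pair_rules` are hypothetical names, and the app itself may rely on an ARM library rather than code like this.

```python
from itertools import permutations
from typing import Dict, List, Set, Tuple


def to_transactions(cells: List[str]) -> List[Set[str]]:
    """Hypothetical helper: split each 'Keyword' cell on ';' into lowercased keywords."""
    return [{k.strip().lower() for k in cell.split(";") if k.strip()} for cell in cells]


def pair_rules(
    transactions: List[Set[str]], min_support: float, min_confidence: float
) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Hypothetical helper: {(antecedent, consequent): (support, confidence)}.

    support(A -> B)    = share of records containing both A and B
    confidence(A -> B) = support(A -> B) / share of records containing A
    Confidence depends on the antecedent, so A -> B and B -> A can differ.
    """
    n = len(transactions)
    if n == 0:
        return {}
    items = sorted(set().union(*transactions))
    share = {i: sum(i in t for t in transactions) / n for i in items}
    rules: Dict[Tuple[str, str], Tuple[float, float]] = {}
    for a, b in permutations(items, 2):
        support = sum(a in t and b in t for t in transactions) / n
        if support < min_support:
            continue
        confidence = support / share[a]  # share[a] > 0 because a occurs in some record
        if confidence >= min_confidence:
            rules[(a, b)] = (support, confidence)
    return rules


if __name__ == "__main__":
    records = ["text mining; library", "Text Mining;library", "library", "library; nlp"]
    for (a, b), (sup, conf) in sorted(pair_rules(to_transactions(records), 0.3, 0.5).items()):
        print(f"{a} -> {b}: support={sup:.2f}, confidence={conf:.2f}")
```

With those four sample records, every record tagged "text mining" is also tagged "library", but only half of the "library" records mention "text mining": the rule text mining -> library has confidence 1.0, while library -> text mining has 0.5, so the two directed edges carry different weights.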

pages/4 Sunburst.py (changed)

```diff
@@ -37,9 +37,44 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/8 Shifterator.py", label="Shifterator", icon="8️⃣")
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
-
-st.
-
+
+with st.expander("Before you start", expanded = True):
+
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])
+    with tab1:
+        st.write("Sunburst's ability to present a thorough and intuitive picture of complex hierarchical data is an essential benefit. Librarians can easily browse and grasp the relationships between different levels of the hierarchy by employing sunburst visualizations. Sunburst visualizations can also be interactive, letting librarians and users drill down into certain categories or subcategories for further information. This interactive and visually appealing depiction improves the librarian's understanding of the collection and provides users with an engaging and user-friendly experience, resulting in improved information retrieval and decision-making.")
+
+    with tab2:
+        st.text("1. Put your CSV file.")
+        st.text("2. You can set the range of years to see how it changed.")
+        st.text("3. The sunburst has 3 levels. The inner circle is the type of data, meanwhile, the middle is the source title and the outer is the year the article was published.")
+        st.text("4. The size of the slice depends on total documents. The average of inner and middle levels is calculated by formula below:")
+        st.code('avg = sum(a * weights) / sum(weights)', language='python')
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+--------------------+
+        | Source         | File Type              | Column             |
+        +----------------+------------------------+--------------------+
+        | Scopus         | Comma-separated values | Source title,      |
+        |                | (.csv)                 | Document Type,     |
+        +----------------+------------------------| Cited by, Year     |
+        | Web of Science | Tab delimited file     |                    |
+        |                | (.txt)                 |                    |
+        +----------------+------------------------+--------------------+
+        | Lens.org       | Comma-separated values | Publication Year,  |
+        |                | (.csv)                 | Publication Type,  |
+        |                |                        | Source Title,      |
+        |                |                        | Citing Works Count |
+        +----------------+------------------------+--------------------+
+        | Hathitrust     | .json                  | htid(Hathitrust ID)|
+        +----------------+------------------------+--------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Sunburst]', anchor=False)
+        st.text("Click the camera icon on the top right menu")
+        st.markdown("")
 
 #===clear cache===
 def reset_all():
@@ -160,6 +195,7 @@ if uploaded_file is not None:
     stitle = st.selectbox('Focus on', (list_stitle), index=None, on_change=reset_all)
 
     if (GAP != 0):
+        col1, col2 = st.columns(2)
         YEAR = st.slider('Year', min_value=MIN, max_value=MAX, value=(MIN, MAX))
         KEYLIM = st.slider('Cited By Count',min_value = MIN1, max_value = MAX1, value = (MIN1,MAX1))
         with st.expander("Filtering setings"):
@@ -185,7 +221,7 @@ if uploaded_file is not None:
         return years, papers
 
     @st.cache_data(ttl=3600)
-    def
+    def vis_sunburst(extype):
         data = papers.copy()
         data['Cited by'] = data['Cited by'].fillna(0)
 
@@ -205,7 +241,7 @@ if uploaded_file is not None:
                             color_continuous_scale='RdBu',
                             color_continuous_midpoint=np.average(viz['cited by'], weights=viz['total docs']))
         fig.update_layout(height=800, width=1200)
-        return fig
+        return fig, viz
 
     years, papers = listyear(extype)
 
@@ -213,7 +249,7 @@ if uploaded_file is not None:
     if {'Document Type','Source title','Cited by','Year'}.issubset(papers.columns):
 
         if st.button("Submit", on_click = reset_all):
-            fig, viz =
+            fig, viz = vis_sunburst(extype)
             st.plotly_chart(fig, height=800, width=1200) #use_container_width=True)
             st.dataframe(viz)
 
```
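Step 4 of the Sunburst tab gives the averaging rule `avg = sum(a * weights) / sum(weights)`, and the figure code passes `np.average(viz['cited by'], weights=viz['total docs'])` as the colour midpoint. Below is a plain-Python sketch of the same weighted mean, plus a hypothetical `level_averages` helper that applies it per parent slice. The column names 'cited by' and 'total docs' come from the diff; the row layout (one dict per child slice holding its mean citation count and document count) is an assumption.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Sequence, Tuple


def weighted_average(values: Sequence[float], weights: Sequence[float]) -> float:
    """avg = sum(a * weights) / sum(weights); a plain stand-in for np.average(values, weights=weights)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total = sum(weights)
    if total == 0:
        raise ZeroDivisionError("weights sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total


def level_averages(rows: Iterable[Dict[str, Any]], level: str) -> Dict[Any, float]:
    """Hypothetical helper: weighted 'cited by' average for each value of `level`."""
    groups: Dict[Any, Tuple[List[float], List[float]]] = defaultdict(lambda: ([], []))
    for row in rows:
        values, weights = groups[row[level]]
        values.append(row["cited by"])
        weights.append(row["total docs"])
    return {key: weighted_average(v, w) for key, (v, w) in groups.items()}


if __name__ == "__main__":
    rows = [
        {"source title": "Journal A", "cited by": 10, "total docs": 1},
        {"source title": "Journal A", "cited by": 2, "total docs": 3},
        {"source title": "Journal B", "cited by": 5, "total docs": 2},
    ]
    print(level_averages(rows, "source title"))
```

For "Journal A", one document averaging 10 citations and three averaging 2 give (10 * 1 + 2 * 3) / 4 = 4.0, whereas an unweighted mean of the two slices would give 6.0; weighting keeps a single highly cited paper from dominating the parent slice's colour.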

pages/5 Burst Detection.py

CHANGED

|

@@ -50,6 +50,55 @@ with st.popover("🔗 Menu"):

|

|

| 50 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 51 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 52 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 53 |

st.header("Burst Detection", anchor=False)

|

| 54 |

st.subheader('Put your file here...', anchor=False)

|

| 55 |

|

|

|

|

| 50 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 51 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 52 |

|

| 53 |

+

with st.expander("Before you start", expanded = True):

|

| 54 |

+

tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])

|

| 55 |

+

with tab1:

|

| 56 |

+

st.write("Burst detection identifies periods when a specific event occurs with unusually high frequency, referred to as 'bursty'. This method can be applied to identify bursts in a continuous stream of events or in discrete groups of events (such as poster title submissions to an annual conference).")

|

| 57 |

+

st.divider()

|

| 58 |

+

st.write('💡 The idea came from this:')

|

| 59 |

+

st.write('Kleinberg, J. (2002). Bursty and hierarchical structure in streams. Knowledge Discovery and Data Mining. https://doi.org/10.1145/775047.775061')

|

| 60 |

+

|

| 61 |

+

with tab2:

|

| 62 |

+

st.text("1. Put your file. Choose your preferred column to analyze.")

|

| 63 |

+

st.text("2. Choose your preferred method to compare.")

|

| 64 |

+

st.text("3. Finally, you can visualize your data.")

|

| 65 |

+

+        st.error("This app includes lemmatization and stopwords. Currently, we only offer English words.", icon="💬")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+----------------------------------+
+        | Source         | File Type              | Column                           |
+        +----------------+------------------------+----------------------------------+
+        | Scopus         | Comma-separated values | Choose your preferred column     |
+        |                | (.csv)                 | that you have to analyze and     |
+        +----------------+------------------------| and need a column called "Year"  |
+        | Web of Science | Tab delimited file     |                                  |
+        |                | (.txt)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Lens.org       | Comma-separated values |                                  |
+        |                | (.csv)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Other          | .csv                   |                                  |
+        +----------------+------------------------|                                  |
+        | Hathitrust     | .json                  |                                  |
+        +----------------+------------------------+----------------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Burst Detection]', anchor=False)
+        st.button('📊 Download high resolution image')
+        st.text("Click download button.")
+
+        st.divider()
+        st.subheader(':blue[Top words]', anchor=False)
+        st.button('👉 Press to download list of top words')
+        st.text("Click download button.")
+
+        st.divider()
+        st.subheader(':blue[Burst]', anchor=False)
+        st.button('👉 Press to download the list of detected bursts')
+        st.text("Click download button.")
+
 st.header("Burst Detection", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 
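The Burst Detection Requirements tab asks for a CSV export (Scopus, Lens.org, other sources) or a tab-delimited Web of Science .txt export, plus a "Year" column. The sketch below is only a rough illustration of such a check. `load_records` is a hypothetical helper, picking the delimiter from the file extension is an assumption, HathiTrust .json input is left out, and the page's real upload code is not part of this diff.

```python
import csv
import io

REQUIRED_COLUMN = "Year"  # the Requirements table asks for a "Year" column


def load_records(filename, text, text_column):
    """Hypothetical helper: parse an export and check the columns it needs.

    Assumption: a .txt upload is a tab-delimited Web of Science export;
    everything else is treated as comma-separated (Scopus, Lens.org, other).
    """
    delimiter = "\t" if filename.lower().endswith(".txt") else ","
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    columns = reader.fieldnames or []
    missing = [c for c in (text_column, REQUIRED_COLUMN) if c not in columns]
    if missing:
        raise ValueError("missing column(s): " + ", ".join(missing))
    return list(reader)


sample = "Title\tYear\nBurst detection in streams\t2002\n"
rows = load_records("export.txt", sample, "Title")
print(rows[0]["Year"])  # 2002
```

A missing column fails early with a readable message. This is generally easier to act on than an error deep inside the analysis.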

pages/6 Keywords Stem.py

CHANGED

|

@@ -49,6 +49,55 @@ with st.popover("🔗 Menu"):

|

|

| 49 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 50 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 51 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 52 |

|

| 53 |

st.header("Keywords Stem", anchor=False)

|

| 54 |

st.subheader('Put your file here...', anchor=False)

|

|

|

|

| 49 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 50 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 51 |

|

| 52 |

+

with st.expander("Before you start", expanded = True):

|

| 53 |

+

tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Result"])

|

| 54 |

+

with tab1:

|

| 55 |

+

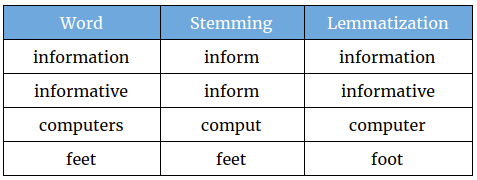

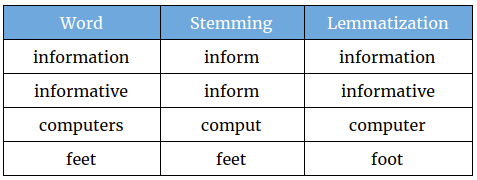

st.write("This approach is effective for locating basic words and aids in catching the true meaning of the word, which can lead to improved semantic analysis and comprehension of the text. Some people find it difficult to check keywords before performing bibliometrics (using software such as VOSviewer and Bibliometrix). This strategy makes it easy to combine and search for fundamental words from keywords, especially if you have a large number of keywords. To do stemming or lemmatization on other text, change the column name to 'Keyword' in your file.")

|

| 56 |

+

st.divider()

|

| 57 |

+

st.write('💡 The idea came from this:')

|

| 58 |

+

st.write('Santosa, F. A. (2022). Prior steps into knowledge mapping: Text mining application and comparison. Issues in Science and Technology Librarianship, 102. https://doi.org/10.29173/istl2736')

|

| 59 |

+

|

| 60 |

+

with tab2:

|

| 61 |

+

st.text("1. Put your file.")

|

| 62 |

+

st.text("2. Choose your preferable method. Picture below may help you to choose wisely.")

|

| 63 |

+

st.markdown("")

|

| 64 |

+

st.text('Source: https://studymachinelearning.com/stemming-and-lemmatization/')

|

| 65 |

+

st.text("3. Now you need to select what kind of keywords you need.")

|

| 66 |

+

st.text("4. Finally, you can download and use the file on VOSviewer, Bibliometrix, or put it on OpenRefine to get better result!")

|

| 67 |

+

st.error("Please check what has changed. It's possible some keywords failed to find their roots.", icon="🚨")

|

| 68 |

+

|

| 69 |

+

with tab3:

|

| 70 |

+

st.code("""

|

| 71 |

+

+----------------+------------------------+---------------------------------+

|

| 72 |

+

| Source | File Type | Column |

|

| 73 |

+

+----------------+------------------------+---------------------------------+

|

| 74 |

+

| Scopus | Comma-separated values | Author Keywords |

|

| 75 |

+

| | (.csv) | Index Keywords |

|

| 76 |

+

+----------------+------------------------+---------------------------------+

|

| 77 |

+

| Web of Science | Tab delimited file | Author Keywords |

|

| 78 |

+

| | (.txt) | Keywords Plus |

|

| 79 |

+

+----------------+------------------------+---------------------------------+

|

| 80 |

+

| Lens.org | Comma-separated values | Keywords (Scholarly Works) |

|

| 81 |

+

| | (.csv) | |

|

| 82 |

+

+----------------+------------------------+---------------------------------+

|

| 83 |

+

| Dimensions | Comma-separated values | MeSH terms |

|

| 84 |

+

| | (.csv) | |

|

| 85 |

+

+----------------+------------------------+---------------------------------+

|

| 86 |

+

| Other | .csv | Change your column to 'Keyword' |

|

| 87 |

+

+----------------+------------------------+---------------------------------+

|

| 88 |

+

| Hathitrust | .json | htid (Hathitrust ID) |

|

| 89 |

+

+----------------+------------------------+---------------------------------+

|

| 90 |

+

""", language=None)

|

| 91 |

+

|

| 92 |

+

with tab4:

|

| 93 |

+

st.subheader(':blue[Result]', anchor=False)

|

| 94 |

+

st.button('Press to download result 👈')

|

| 95 |

+

st.text("Go to Result and click Download button.")

|

| 96 |

+

|

| 97 |

+

st.divider()

|

| 98 |

+

st.subheader(':blue[List of Keywords]', anchor=False)

|

| 99 |

+

st.button('Press to download keywords 👈')

|

| 100 |

+

st.text("Go to List of Keywords and click Download button.")

|

| 101 |

|

| 102 |

st.header("Keywords Stem", anchor=False)

|

| 103 |

st.subheader('Put your file here...', anchor=False)

|
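The Keywords Stem prologue describes combining keywords that share a root. The toy sketch below shows the idea on Scopus-style keyword cells and assumes keywords are separated by semicolons. `crude_stem` and `group_keywords` are hypothetical helpers. The suffix stripper is much cruder than a real stemmer such as Porter's or a dictionary-based lemmatizer, and it is not the method the page uses.

```python
from collections import defaultdict

# Hypothetical suffix list, checked in order; real stemmers such as Porter's
# apply ordered rule sets with conditions on what remains of the word.
SUFFIXES = ("ations", "ation", "ings", "ing", "ies", "es", "s")


def crude_stem(word):
    """Strip the first matching suffix, keeping at least 3 characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def group_keywords(cells, sep=";"):
    """Group keywords from separator-delimited cells by their stemmed form."""
    groups = defaultdict(set)
    for cell in cells:
        for keyword in cell.split(sep):
            keyword = keyword.strip().lower()
            if not keyword:
                continue  # skip empty entries such as a trailing separator
            key = " ".join(crude_stem(w) for w in keyword.split())
            groups[key].add(keyword)
    return dict(groups)


cells = ["Citation; Bibliometrics", "citations; bibliometric; Text mining"]
for key, keywords in sorted(group_keywords(cells).items()):
    print(key, sorted(keywords))
# bibliometric ['bibliometric', 'bibliometrics']
# cit ['citation', 'citations']
# text min ['text mining']
```

The output also shows why results need checking. A stem such as "cit" is not a real word, which is the usual trade-off of stemming compared with lemmatization, where each keyword maps to a dictionary form.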

pages/7 Sentiment Analysis.py

CHANGED

|

@@ -50,6 +50,49 @@ with st.popover("🔗 Menu"):

|

|

| 50 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 51 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 52 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 53 |

st.header("Sentiment Analysis", anchor=False)

|

| 54 |

st.subheader('Put your file here...', anchor=False)

|

| 55 |

|

|

|

|

| 50 |

st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")

|

| 51 |

st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")

|

| 52 |

|

| 53 |

+

with st.expander("Before you start", expanded = True):

|

| 54 |

+

tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])

|

| 55 |

+

with tab1:

|

| 56 |

+

st.write('Sentiment analysis uses natural language processing to identify patterns in large text datasets, revealing the writer’s opinions, emotions, and attitudes. It assesses subjectivity (objective vs. subjective), polarity (positive, negative, neutral), and emotions (e.g., anger, joy, sadness, surprise, jealousy).')

|

| 57 |

+

st.divider()

|

| 58 |

+

st.write('💡 The idea came from this:')

|

| 59 |

+

st.write('Lamba, M., & Madhusudhan, M. (2021, July 31). Sentiment Analysis. Text Mining for Information Professionals, 191–211. https://doi.org/10.1007/978-3-030-85085-2_7')

|

| 60 |

+

|

| 61 |

+

with tab2:

|

| 62 |

+

st.write("1. Put your file. Choose your prefered column to analyze")

|

| 63 |

+

st.write("2. Choose your preferred method and decide which words you want to remove")

|

| 64 |

+

st.write("3. Finally, you can visualize your data.")

|

| 65 |

+

|

| 66 |

+

with tab3:

|

| 67 |

+

st.code("""

|

| 68 |

+

+----------------+------------------------+----------------------------------+

|

| 69 |

+

| Source | File Type | Column |

|

| 70 |

+

+----------------+------------------------+----------------------------------+

|

| 71 |

+

| Scopus | Comma-separated values | Choose your preferred column |

|

| 72 |

+

| | (.csv) | that you have |

|

| 73 |

+

+----------------+------------------------| |

|

| 74 |

+

| Web of Science | Tab delimited file | |

|

| 75 |

+

| | (.txt) | |

|

| 76 |

+

+----------------+------------------------| |

|

| 77 |

+

| Lens.org | Comma-separated values | |

|

| 78 |

+

| | (.csv) | |

|

| 79 |

+

+----------------+------------------------| |

|

| 80 |

+

| Other | .csv | |

|

| 81 |

+

+----------------+------------------------| |

|

| 82 |

+

| Hathitrust | .json | |

|

| 83 |

+

+----------------+------------------------+----------------------------------+

|

| 84 |

+

""", language=None)

|

| 85 |

+

|

| 86 |

+

with tab4:

|

| 87 |

+

st.subheader(':blue[Sentiment Analysis]', anchor=False)

|

| 88 |

+

st.write("Click the three dots at the top right then select the desired format")

|

| 89 |

+

st.markdown("")

|

| 90 |

+

st.divider()

|

| 91 |

+

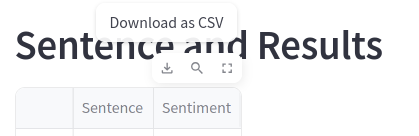

st.subheader(':blue[CSV Results]', anchor=False)

|

| 92 |

+

st.text("Click Download button")

|

| 93 |

+

st.markdown("")

|

| 94 |

+

|

| 95 |

+

|

| 96 |

st.header("Sentiment Analysis", anchor=False)

|

| 97 |

st.subheader('Put your file here...', anchor=False)

|

| 98 |

|
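The Sentiment Analysis prologue mentions polarity (positive, negative, neutral). Below is a minimal sketch of lexicon-based polarity scoring. The word lists, the score formula, and the 0.05 threshold are all hypothetical choices made for illustration. The diff does not show which sentiment library the page actually calls.

```python
import re

# Hypothetical miniature lexicons; real tools use large scored lexicons and
# also handle negation ("not good"), intensifiers, and punctuation.
POSITIVE = {"good", "great", "useful", "effective", "improved"}
NEGATIVE = {"bad", "poor", "difficult", "failed", "limited"}


def polarity(text, threshold=0.05):
    """Return (score, label) with score = (positive - negative) / token count."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0, "neutral"
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    score = (pos - neg) / len(tokens)
    if score > threshold:
        label = "positive"
    elif score < -threshold:
        label = "negative"
    else:
        label = "neutral"
    return score, label


print(polarity("The new method is effective and useful.")[1])  # positive
```

Dividing by the token count keeps long and short texts on a comparable scale. The threshold sets how far from zero a score must be before it counts as positive or negative instead of neutral.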

pages/8 Shifterator.py

CHANGED

@@ -46,7 +46,42 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/8 Shifterator.py", label="Shifterator", icon="8️⃣")
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
-
+
+with st.expander("Before you start", expanded = True):
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])
+    with tab1:
+        st.write("")
+
+    with tab2:
+        st.text("1. Put your file. Choose your preferred column to analyze.")
+        st.text("2. Choose your preferred method to count the words and decide how many top words you want to include or remove.")
+        st.text("3. Finally, you can visualize your data.")
+        st.error("This app includes lemmatization and stopwords. Currently, we only offer English words.", icon="💬")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+----------------------------------+
+        | Source         | File Type              | Column                           |
+        +----------------+------------------------+----------------------------------+
+        | Scopus         | Comma-separated values | Choose your preferred column     |
+        |                | (.csv)                 | that you have to analyze and     |
+        +----------------+------------------------| and need a column called "Year"  |
+        | Web of Science | Tab delimited file     |                                  |
+        |                | (.txt)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Lens.org       | Comma-separated values |                                  |
+        |                | (.csv)                 |                                  |
+        +----------------+------------------------|                                  |
+        | Other          | .csv                   |                                  |
+        +----------------+------------------------|                                  |
+        | Hathitrust     | .json                  |                                  |
+        +----------------+------------------------+----------------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Shifterator]', anchor=False)
+        st.write("Right-click visualization and click \"Save image as\" ")
+
 st.header("Shifterator", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 
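Step 2 of the Shifterator page asks how words are counted and how many top words to keep. A proportion shift is one simple way to compare two texts word by word: each word's contribution is its relative frequency in the second text minus its relative frequency in the first. The sketch rests on three assumptions: that shift type, a plain lowercase regex tokenizer, and the hypothetical helpers `relative_freqs` and `proportion_shift`. The page itself may use a different shift type.

```python
import re
from collections import Counter


def relative_freqs(text):
    """Lowercase word counts normalized so they sum to 1."""
    counts = Counter(re.findall(r"[a-z']+", text.lower()))
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def proportion_shift(text1, text2, top_n=10):
    """Rank words by p2(w) - p1(w); positive means relatively more common in text2."""
    p1, p2 = relative_freqs(text1), relative_freqs(text2)
    scores = {w: p2.get(w, 0.0) - p1.get(w, 0.0) for w in set(p1) | set(p2)}
    # Largest absolute contribution first; ties broken alphabetically.
    ranked = sorted(scores.items(), key=lambda kv: (-abs(kv[1]), kv[0]))
    return ranked[:top_n]


before = "open data and open access for the library"
after = "data sharing and data reuse in the library"
for word, score in proportion_shift(before, after, top_n=3):
    print(f"{word:8s} {score:+.3f}")
```

Here `top_n` plays the role of "how many top words" in step 2. Removing stopwords before counting, as the page's note on stopwords suggests, would keep function words like "the" from crowding the ranking.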

pages/9 Summarization.py

CHANGED

@@ -40,7 +40,39 @@ with st.popover("🔗 Menu"):
     st.page_link("pages/8 Shifterator.py", label="Shifterator", icon="8️⃣")
     st.page_link("pages/9 Summarization.py", label = "Summarization",icon ="9️⃣")
     st.page_link("pages/10 WordCloud.py", label = "WordCloud", icon = "🔟")
-
+
+with st.expander("Before you start", expanded = True):
+    tab1, tab2, tab3, tab4 = st.tabs(["Prologue", "Steps", "Requirements", "Download Visualization"])
+    with tab1:
+        st.write("")
+
+    with tab2:
+        st.text("1. Put your file. Choose your preferred column to analyze (if CSV).")
+        st.text("2. Choose your preferred method to count the words and decide how many top words you want to include or remove.")
+        st.text("3. Finally, you can visualize your data.")
+        st.error("This app includes lemmatization and stopwords. Currently, we only offer English words.", icon="💬")
+
+    with tab3:
+        st.code("""
+        +----------------+------------------------+
+        | Source         | File Type              |
+        +----------------+------------------------+
+        | Scopus         | Comma-separated values |
+        |                | (.csv)                 |
+        +----------------+------------------------|
+        | Lens.org       | Comma-separated values |
+        |                | (.csv)                 |
+        +----------------+------------------------|
+        | Other          | .csv/ .txt(full text)  |
+        +----------------+------------------------|
+        | Hathitrust     | .json                  |
+        +----------------+------------------------+
+        """, language=None)
+
+    with tab4:
+        st.subheader(':blue[Summarization]', anchor=False)
+        st.write("Click \"Download Results\" button")
+
 st.header("Summarization", anchor=False)
 st.subheader('Put your file here...', anchor=False)
 